(Image by Author: Gina Acosta Gutiérrez)

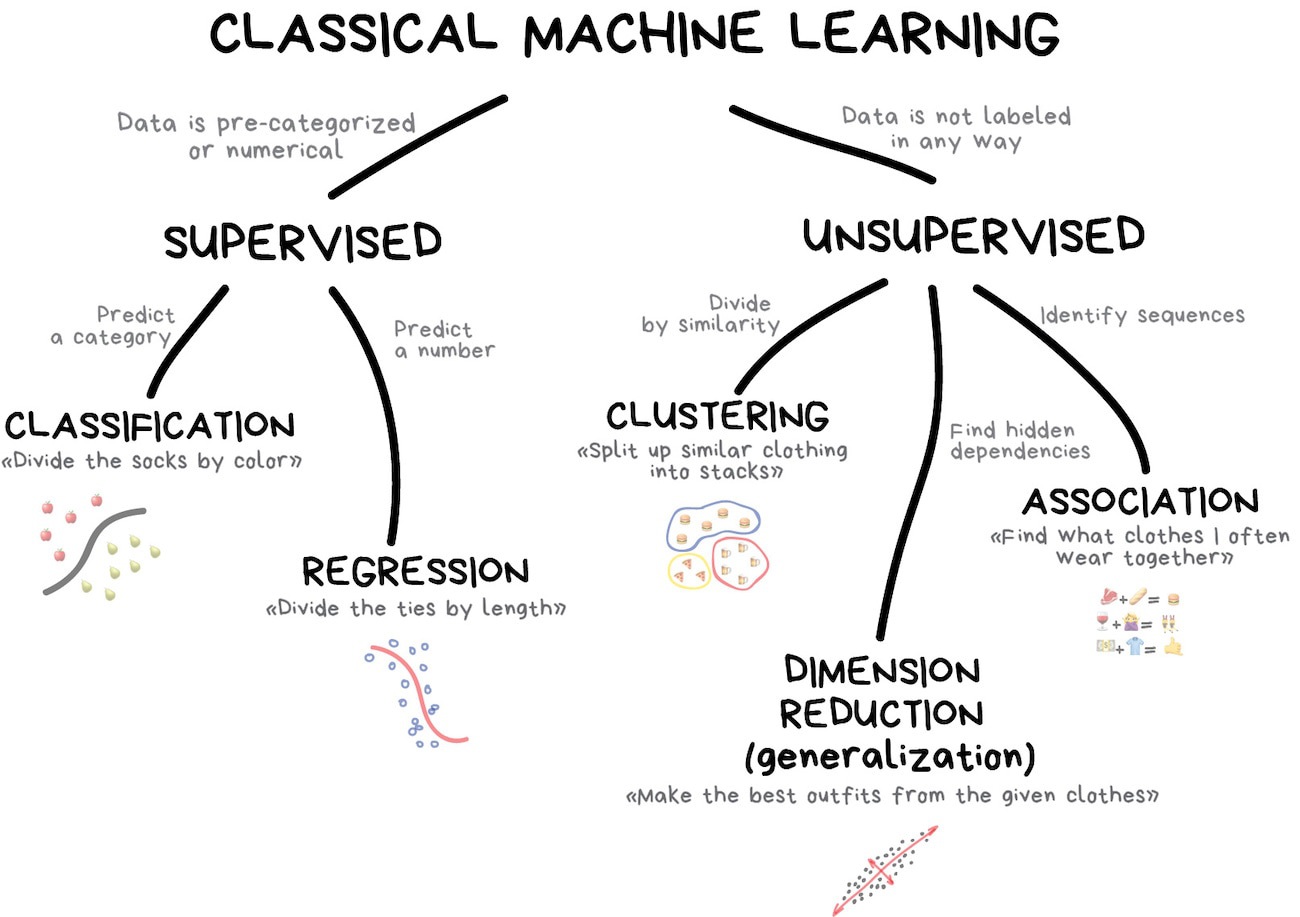

In Machine Learning, three main paradigms are used: supervised, unsupervised, and reinforcement learning. They differ in the tasks they solve and in how the data is presented to the algorithm. Supervised learning is the most commonly used, and the paradigms can be combined to obtain better results.

(Image credit: Vas3k's Blog)

Supervised learning algorithms are trained on a dataset that includes both input and output values. The algorithm is trained to learn to map the input values to the output values. For example, a supervised learning algorithm could be trained to predict the price of a house based on its features, such as the number of bedrooms, the square footage, and the location.
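Below is a minimal sketch of the house-price example. It assumes NumPy and a small made-up dataset. The features, the prices, and the helper `predict_price` are hypothetical. Location is left out because it is categorical and would need to be encoded first. The prices are generated from a known linear formula, so ordinary least squares should recover that formula's coefficients.

```python
import numpy as np

# Hypothetical toy data: [bedrooms, square footage] for five houses.
X = np.array([
    [2, 800],
    [3, 1200],
    [3, 1500],
    [4, 1800],
    [5, 2400],
], dtype=float)
# Hypothetical labels (the "output values"): price = 50,000 + 10,000*bedrooms + 100*sqft.
y = 50_000 + 10_000 * X[:, 0] + 100 * X[:, 1]

# Add a column of ones so the model can learn an intercept.
X_design = np.column_stack([np.ones(len(X)), X])

# Ordinary least squares: find weights w minimizing ||X_design @ w - y||^2.
w, *_ = np.linalg.lstsq(X_design, y, rcond=None)

def predict_price(bedrooms, sqft):
    """Predict the price of a new house with the learned weights."""
    return w[0] + w[1] * bedrooms + w[2] * sqft

print(np.round(w, 2))
print(round(predict_price(3, 1000), 2))
```

With real data the fit would not be exact. The learned weights would be the ones that minimize the squared error between predicted and actual prices.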

Some of the most common supervised learning algorithms include:

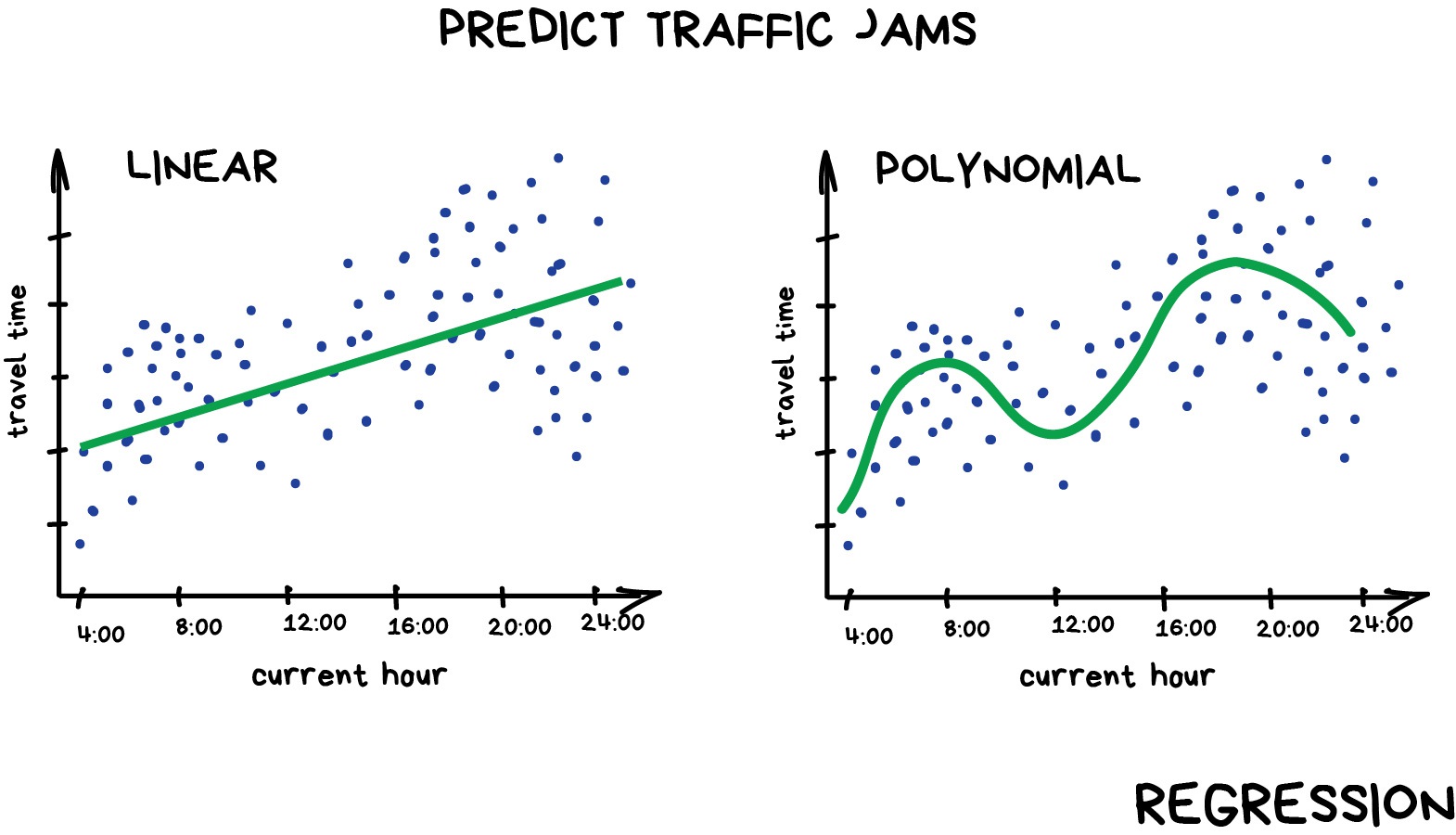

- Linear regression: A simple algorithm that predicts a continuous value based on a set of input values (Regression).

(Image credit: Vas3k's Blog)

- Logistic regression: A statistical model that estimates the probability of a categorical outcome (an event) based on a set of input values (Classification).

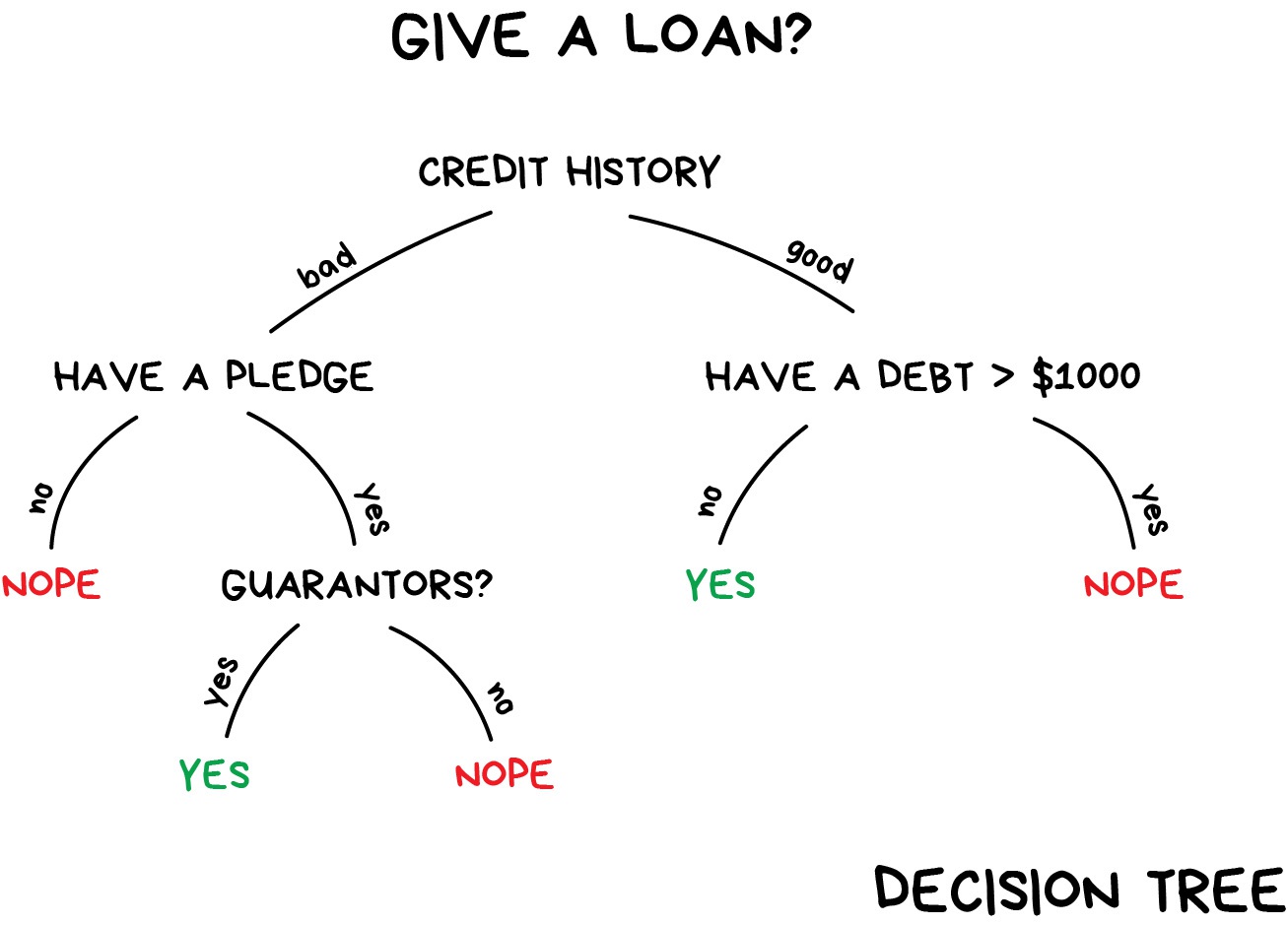

- Decision trees: An algorithm that makes predictions through a sequence of if/else splits on the input features. It can predict both categorical values (classification) and continuous values (regression).

(Image credit: Vas3k's Blog)

- Random Forest: Also known as random decision forests, an ensemble learning method for classification and regression that combines the predictions of many decision trees.

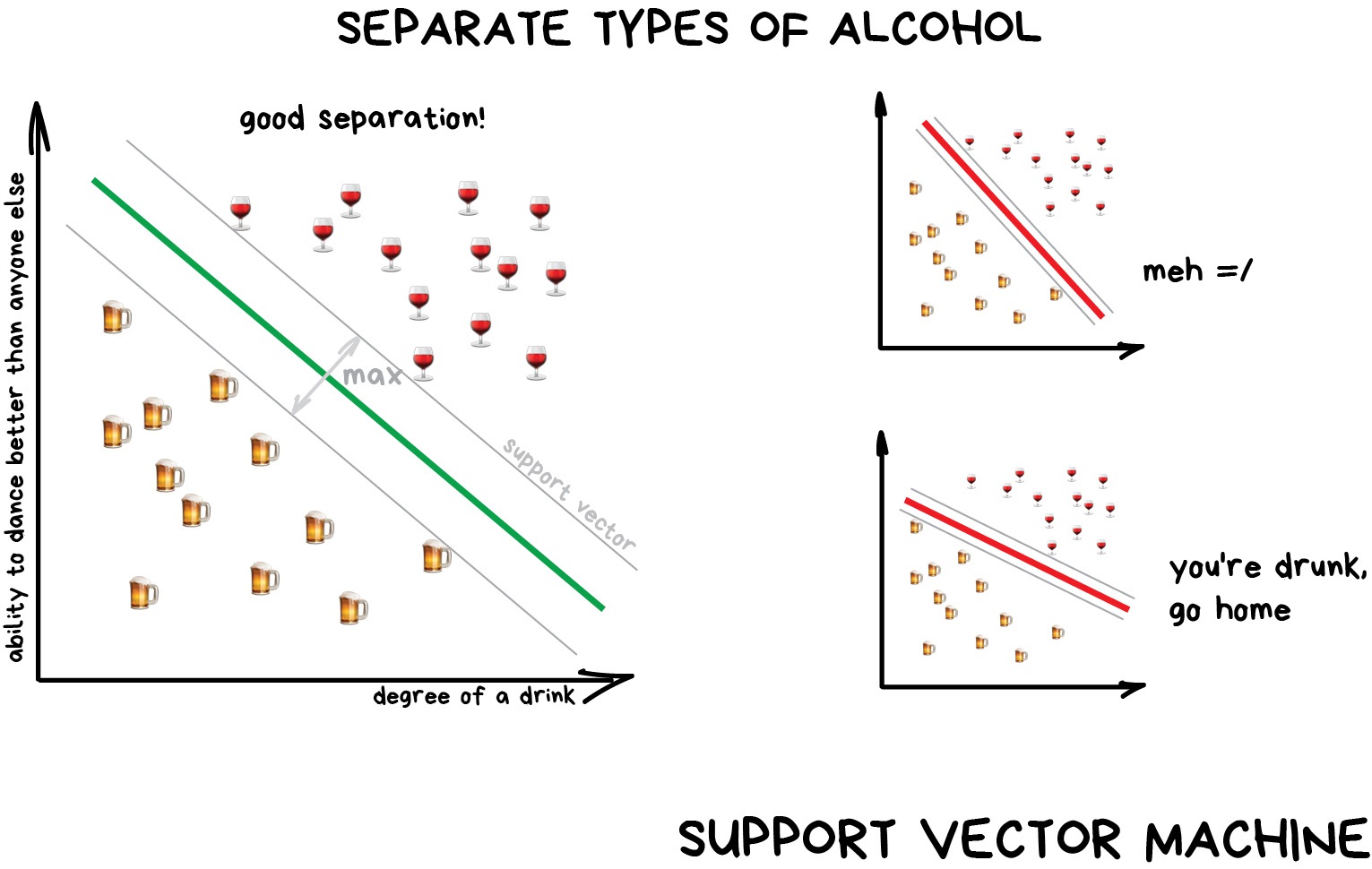

- Support vector machines (SVMs): SVMs are versatile and robust algorithms that can be used for both classification and regression tasks.

(Image credit: Vas3k's Blog)

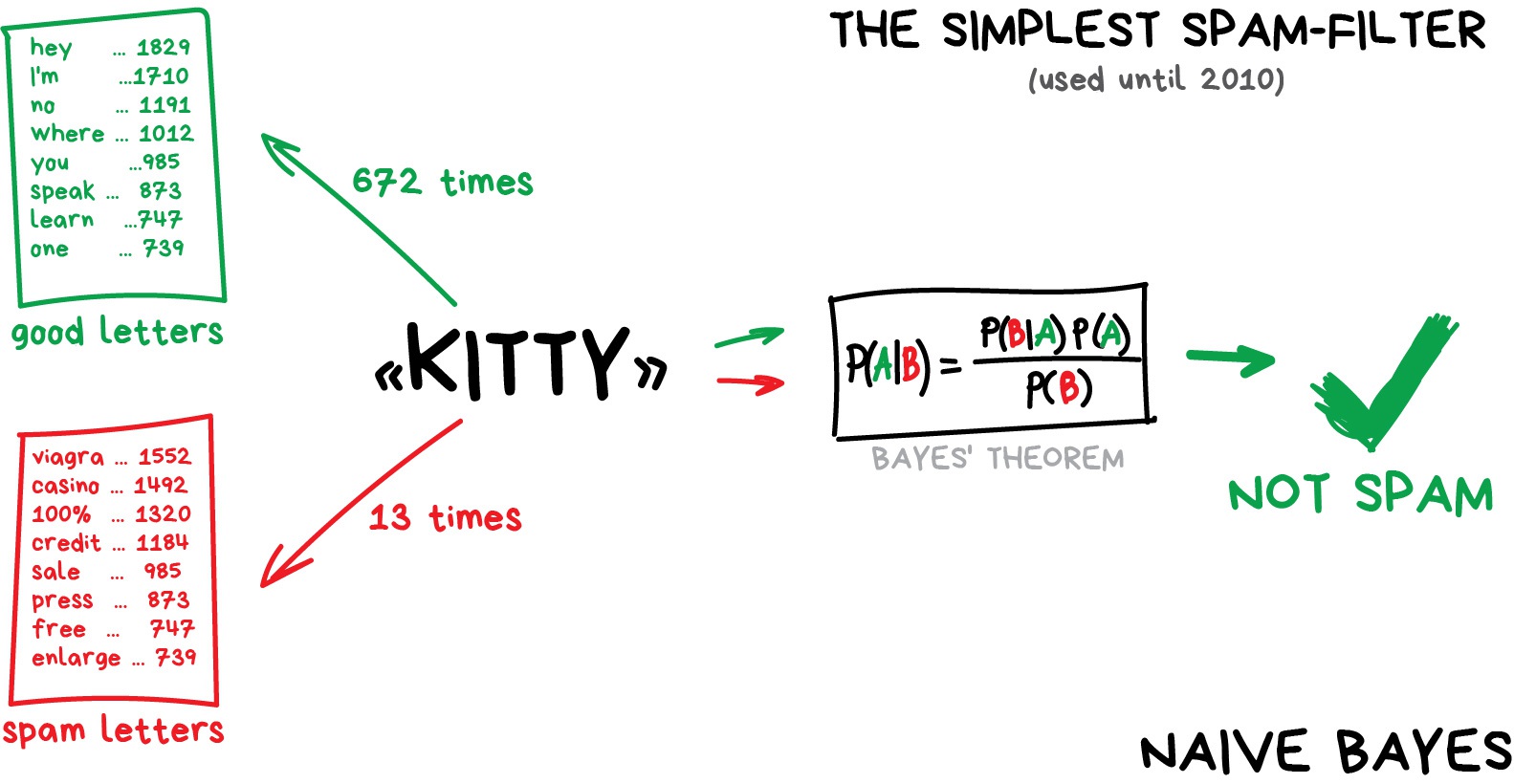

- Naive Bayes classifier: A simple but effective algorithm for classification tasks.

(Image credit: Vas3k's Blog)

- k-Nearest Neighbors (k-NN): An algorithm that predicts the value for a new data point from its k closest training examples. It is used in both classification and regression tasks.

- Artificial Neural Networks: An interconnected group of nodes, inspired by a simplification of the neurons in a brain. They are widely used, for example, in image classification.
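To illustrate one algorithm from the list, the sketch below implements k-nearest neighbors classification from scratch with NumPy. It assumes Euclidean distance and a simple majority vote. The function `knn_predict` and the toy data are hypothetical. In practice, scikit-learn's `KNeighborsClassifier` provides a tested implementation.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, X_new, k=3):
    """Classify each row of X_new by majority vote among its k nearest
    training points, using Euclidean distance."""
    predictions = []
    for x in X_new:
        # Distance from x to every training point.
        distances = np.linalg.norm(X_train - x, axis=1)
        # Indices of the k closest training points.
        nearest = np.argsort(distances)[:k]
        # Majority vote among their labels.
        votes = Counter(y_train[nearest].tolist())
        predictions.append(votes.most_common(1)[0][0])
    return np.array(predictions)

# Hypothetical toy data: two well-separated groups of 2-D points.
X_train = np.array([[1.0, 1.0], [1.5, 2.0], [2.0, 1.5],
                    [6.0, 6.0], [6.5, 7.0], [7.0, 6.5]])
y_train = np.array(["A", "A", "A", "B", "B", "B"])

print(knn_predict(X_train, y_train, np.array([[1.2, 1.4], [6.8, 6.1]])))
```

When the vote is tied, `Counter.most_common` returns the label it encountered first. For that reason, an odd `k` is a common choice for two-class problems.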

Unsupervised learning algorithms are trained on a dataset that only includes input values. The algorithm learns to find patterns in the data without any guidance from labeled output values. For example, an unsupervised learning algorithm could be used to cluster customers into groups based on their spending habits.
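The customer-clustering example can be sketched with k-means (Lloyd's algorithm), one of the algorithms listed below. This is a minimal NumPy version that assumes two hypothetical spending features and k = 2. The function names `assign` and `k_means` and the data are illustrative. The starting centroids are chosen at random, so the cluster numbers themselves are arbitrary. Only which customers end up together is meaningful.

```python
import numpy as np

def assign(X, centroids):
    """Index of the nearest centroid (Euclidean distance) for every row of X."""
    distances = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return distances.argmin(axis=1)

def k_means(X, k, n_iter=100, seed=0):
    """Plain k-means (Lloyd's algorithm): alternate between assigning points to
    the nearest centroid and moving each centroid to the mean of its points."""
    rng = np.random.default_rng(seed)
    # Start from k distinct data points chosen at random.
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        labels = assign(X, centroids)
        # Move each centroid to the mean of its points (keep it if the cluster is empty).
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return assign(X, centroids), centroids

# Hypothetical customers: [monthly grocery spend, monthly electronics spend].
customers = np.array([
    [200, 20], [220, 35], [180, 25],   # mostly groceries
    [60, 400], [80, 450], [50, 380],   # mostly electronics
], dtype=float)

labels, centroids = k_means(customers, k=2)
print(labels)
print(centroids.round(1))
```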

Some of the most common unsupervised learning algorithms include:

- Principal component analysis (PCA): PCA is a dimensionality reduction algorithm that reduces the number of features in a dataset while preserving as much information as possible.

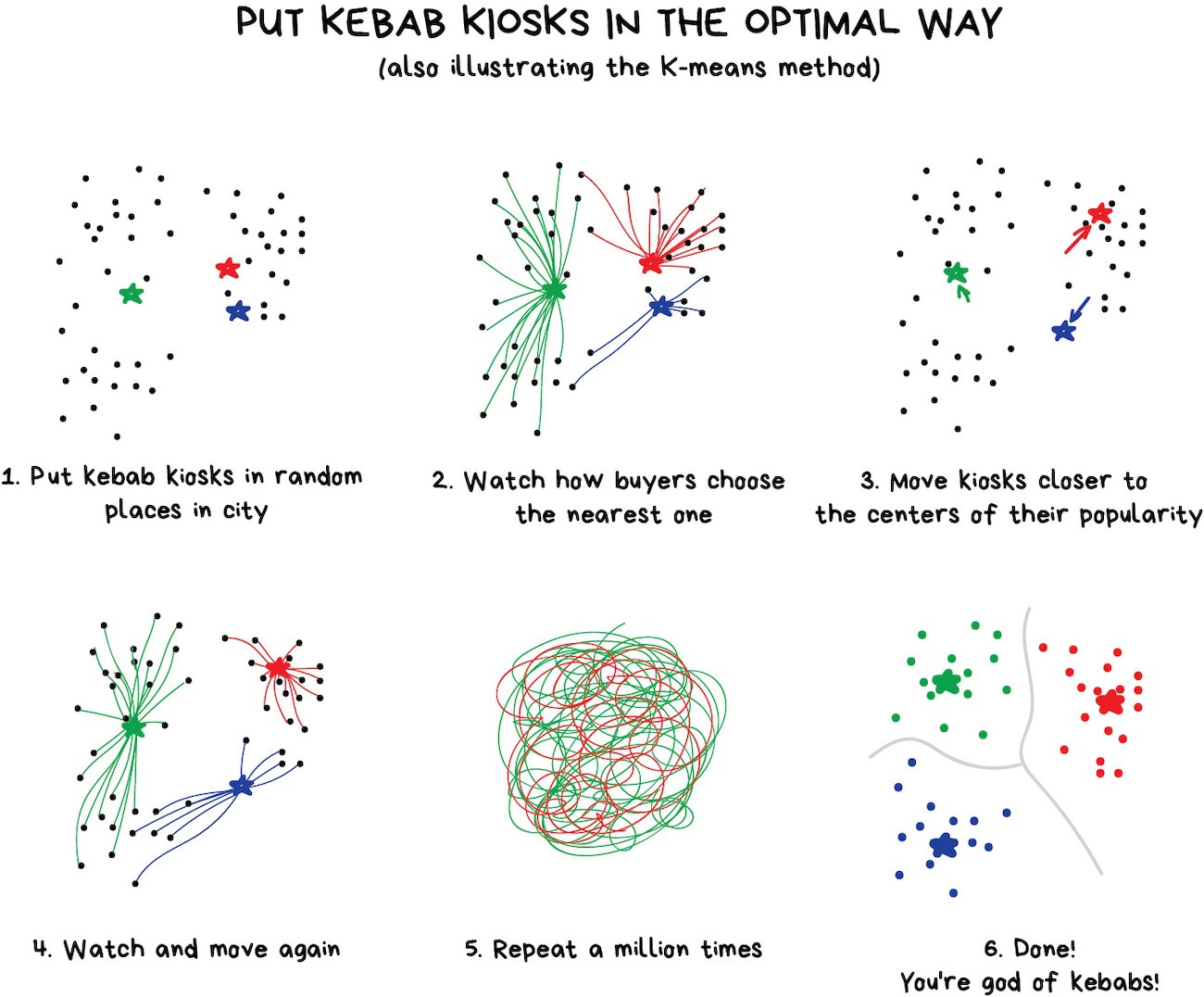

- K-means clustering: K-means [clustering](https://en.wikipedia.org/wiki/Cluster_analysis) is an algorithm that groups similar data points into k clusters, each represented by the mean (centroid) of its points.

(Image credit: Vas3k's Blog)

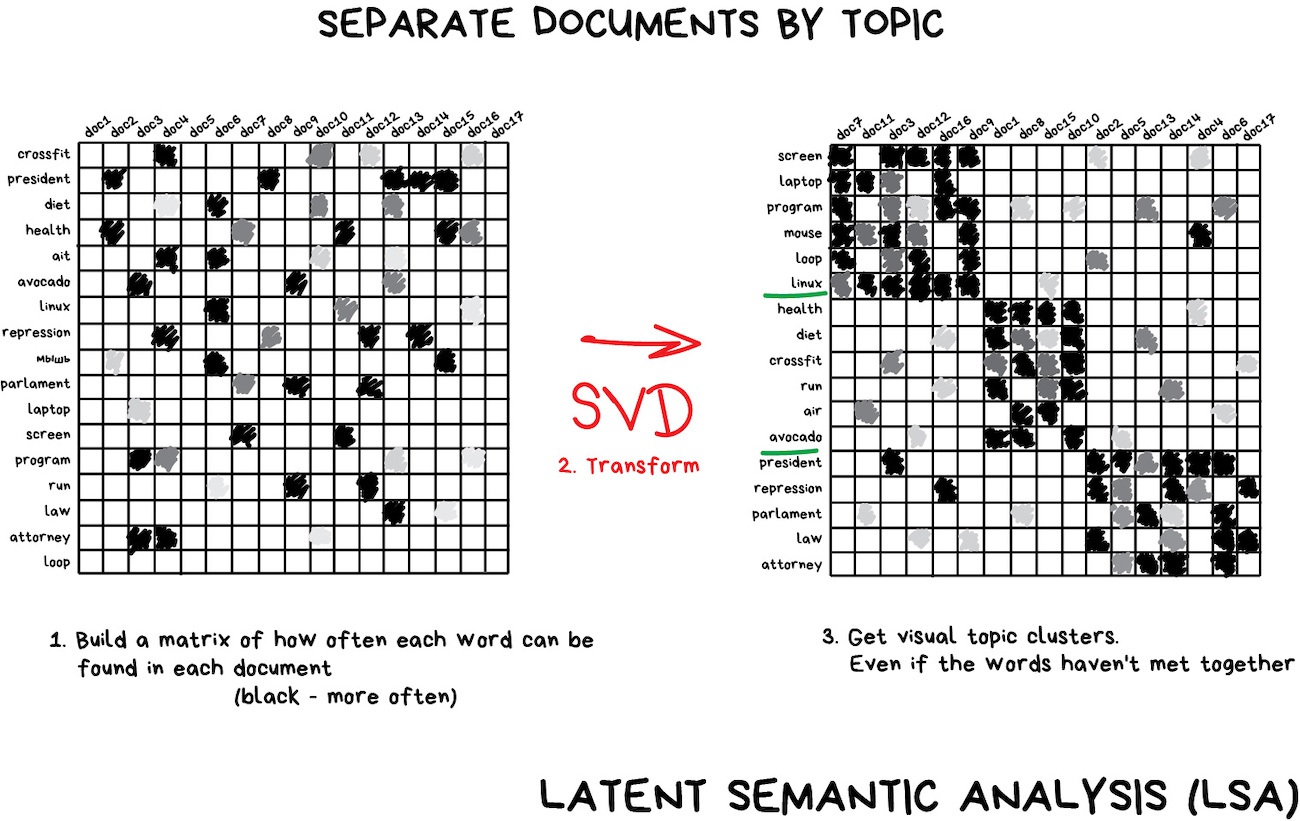

- Latent Semantic Analysis (LSA): A natural language processing method that analyzes relationships between a set of documents and the terms they contain.

(Image credit: Vas3k's Blog)

- Hierarchical clustering: Hierarchical clustering is an algorithm that creates a hierarchy of clusters.

- Gaussian mixture models (GMMs): GMMs are probabilistic models that represent the data as a mixture of Gaussian distributions and can be used to cluster data.

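- DBSCAN: Density-based spatial clustering of applications with noise groups together points that lie in dense regions and marks points in low-density regions as noise. It is one of the most commonly used clustering algorithms.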

- Association Rule Learning: A rule-based machine learning method for discovering relations between variables in large databases. Examples of this type of algorithm are the Apriori algorithm and the Eclat algorithm.
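For association rule learning, the sketch below computes the two measures that Apriori-style rules are usually judged by. Support is how often an itemset appears. Confidence is how often a rule's right-hand side appears when its left-hand side does. This is not a full Apriori or Eclat implementation, since it only scores item pairs. The baskets and the 40% minimum support are made-up values.

```python
from itertools import combinations

# Hypothetical shopping baskets (one set of items per transaction).
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk", "eggs"},
    {"bread", "milk", "eggs"},
]

def support(itemset):
    """Fraction of transactions that contain every item in itemset."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent):
    """Estimated P(consequent | antecedent) = support(A and C) / support(A)."""
    return support(antecedent | consequent) / support(antecedent)

# Keep item pairs that appear in at least 40% of baskets (minimum support),
# then score both rule directions for each frequent pair.
items = sorted(set().union(*transactions))
min_support = 0.4
rules = []
for a, b in combinations(items, 2):
    pair = {a, b}
    if support(pair) >= min_support:
        for lhs, rhs in (({a}, {b}), ({b}, {a})):
            rules.append((lhs, rhs, support(pair), confidence(lhs, rhs)))

for lhs, rhs, s, c in rules:
    print(f"{sorted(lhs)} -> {sorted(rhs)}: support={s:.2f}, confidence={c:.2f}")
```

Apriori extends this idea to larger itemsets. It only builds candidate itemsets whose subsets are all frequent, which keeps the search manageable on large databases.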

Reinforcement learning algorithms learn to make decisions in an environment by trial and error. The algorithm is given a reward for taking actions that lead to a desired outcome. For example, a reinforcement learning algorithm could be used to train a robot to play a game of Pong. The robot would be given a reward for hitting the ball and a penalty for missing the ball. Over time, the robot would learn how to play the game by trial and error.

Some of the most common reinforcement learning algorithms include:

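- Q-learning: An algorithm that learns the expected cumulative reward (the Q-value) of taking each action in each state, and then chooses the actions that maximize it.

- Policy gradient methods: Policy gradient methods learn a policy that maps states to actions directly, by following the gradient of the expected reward.

- Actor-critic methods: Actor-critic methods combine value-based learning (as in Q-learning) with policy gradient methods. An "actor" learns the policy, while a "critic" learns a value function that evaluates the actor's choices.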
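The following is a minimal tabular Q-learning sketch. It assumes a hypothetical five-state corridor: the agent starts at the left end and receives a reward of 1 only when it reaches the right end. The learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are illustrative values, not recommendations. As in the Pong example, the agent learns purely by trial and error.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the goal
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right

def step(state, action_idx):
    """Hypothetical toy environment: move along the corridor. Reaching the
    goal gives reward 1 and ends the episode; every other step gives 0."""
    next_state = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Q[s][a] estimates the discounted return of taking action a in state s.
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            # Ties (e.g. at the start, when both values are 0) are broken at random.
            if rng.random() < epsilon or Q[state][0] == Q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if Q[state][0] > Q[state][1] else 1
            next_state, reward, done = step(state, a)
            # Q-learning update: move Q toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(Q[next_state])
            Q[state][a] += alpha * (target - Q[state][a])
            state = next_state
    return Q

Q = q_learning()
policy = ["right" if q[1] > q[0] else "left" for q in Q[:-1]]
print(policy)
```

After training, the greedy policy should move right in every non-goal state. That is the route that reaches the reward in the fewest discounted steps.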

There are many other machine learning algorithms that do not fit neatly into the categories of supervised learning, unsupervised learning, or reinforcement learning. Some of these algorithms include:

- Semi-supervised Learning or Weak Supervision: These algorithms combine a small amount of labeled data with a large amount of unlabeled data during training. An example is the label propagation algorithm.

-

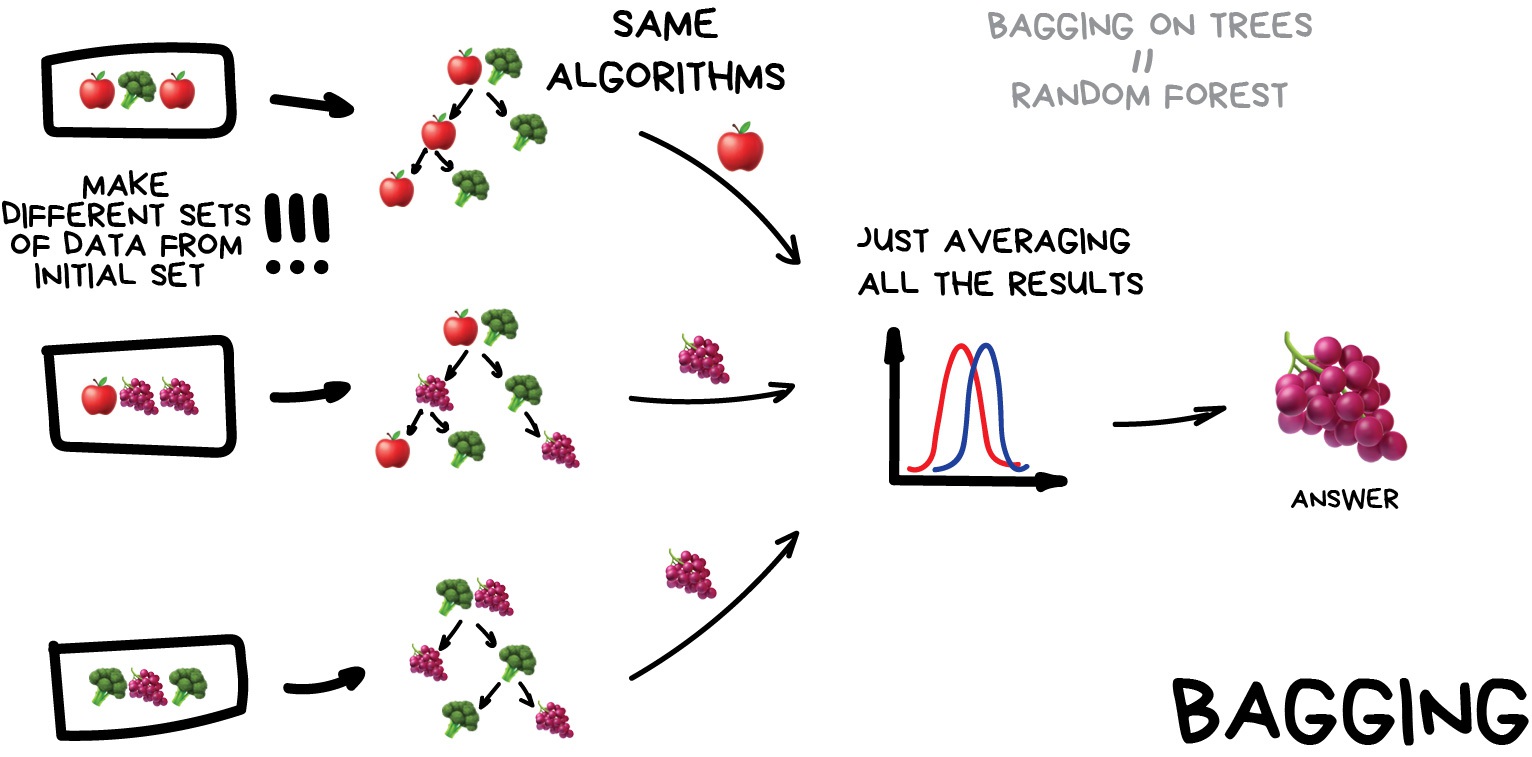

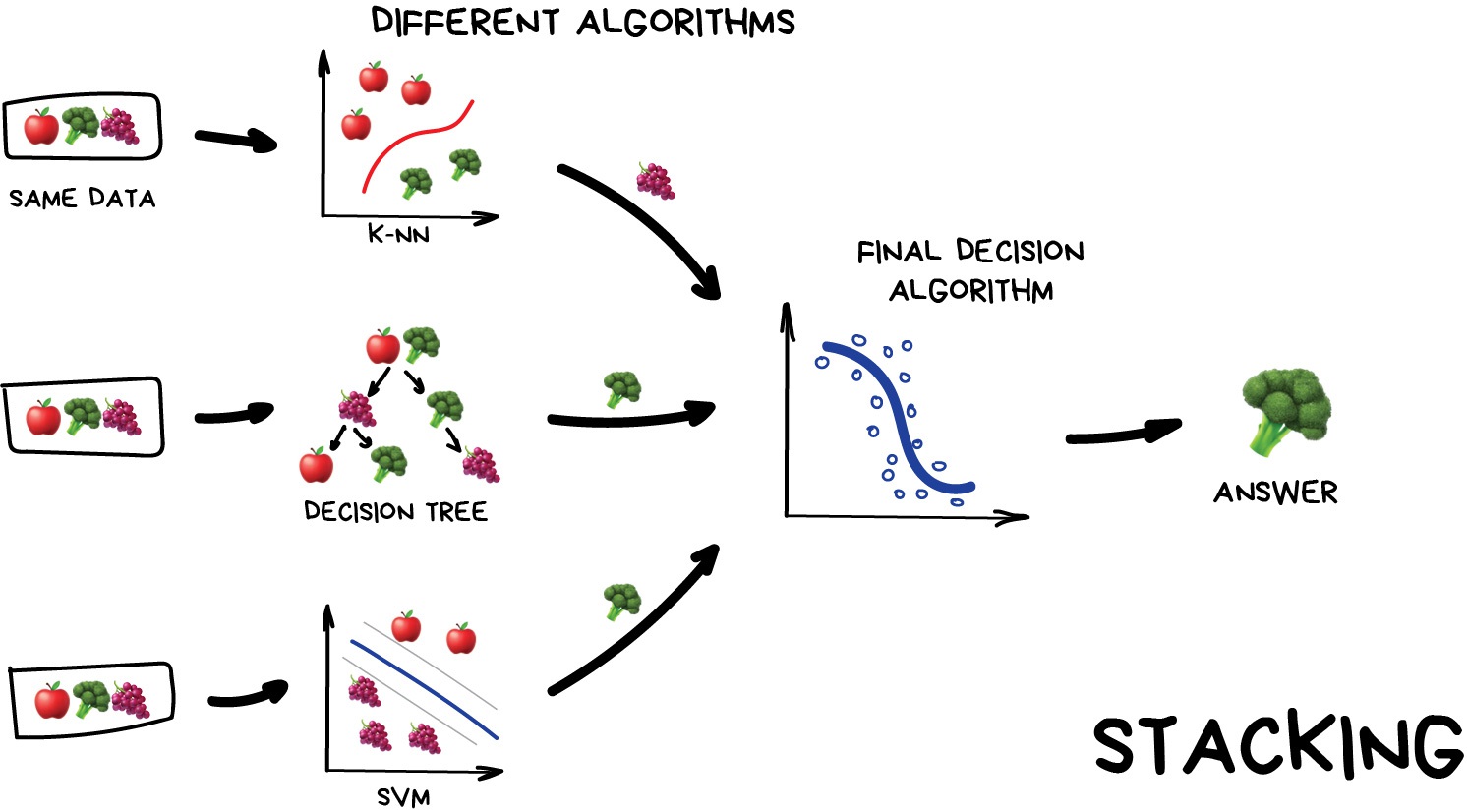

Ensemble Learning: A concrete set of learning algorithms is used to obtain better prediction performance. Some common types of ensembles are:

  - Bootstrap aggregating (bagging): Each model in the ensemble is trained on a bootstrapped data set, created by sampling from the original training data set with replacement. The models' predictions are then combined, for example by voting or averaging.

(Image credit: Vas3k's Blog)

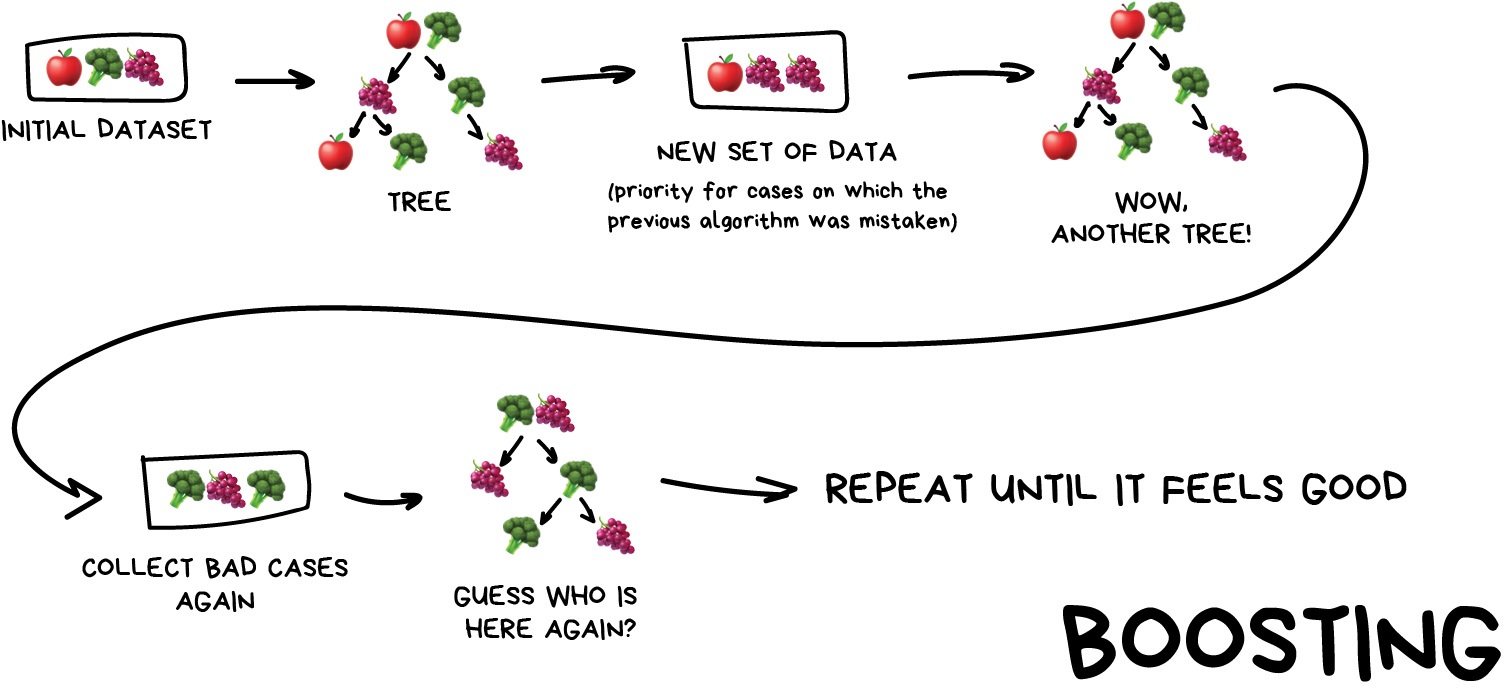

  - Boosting: Models are trained in sequence, with each new model emphasizing the training data that the previously learned models misclassified.

(Image credit: Vas3k's Blog)

  - Voting: Constructs a compound model from a pool of prediction algorithms, for example by taking the majority vote (classification) or the average (regression) of their predictions.

(Image credit: Vas3k's Blog)

- Dimensionality reduction: The transformation of data from a high-dimensional space into a low-dimensional space so that the low-dimensional representation retains some meaningful properties of the original data. Examples of this type of algorithm are:

  - Principal Component Analysis (PCA): A statistical technique for reducing the dimensionality of a dataset. It transforms the data into a new coordinate system whose first few axes (the principal components) preserve most of the variability of the original data.

  - Linear Discriminant Analysis (LDA): Closely related to PCA, since both look for linear combinations of variables that best explain the data. Unlike PCA, LDA uses the class labels and looks for the combinations that best separate the classes.

  - t-distributed Stochastic Neighbor Embedding (t-SNE): A statistical method for visualizing high-dimensional data in a low-dimensional space of two or three dimensions.

- Natural language processing (NLP) algorithms: NLP algorithms are used to process and understand natural language.

- Computer vision algorithms: Computer vision algorithms are used to acquire, process, analyze, and understand digital images and videos. They extract high-dimensional data from the real world to produce numerical or symbolic information, for example in the form of decisions.

- Speech recognition algorithms: Speech recognition algorithms are used to convert spoken language into text. The reverse process is known as speech synthesis, which is the artificial production of human speech.

- Recommendation systems: Recommendation systems are used to recommend products or services that are pertinent to a particular user.
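The ensemble ideas in the list above can be made concrete with a minimal bagging sketch in NumPy. Each base model is a one-split decision tree (a "stump") trained on a bootstrap sample, and the ensemble predicts by majority vote. The 1-D toy data, which has a noisy boundary between the classes, is an assumption for illustration. So are the function names `fit_stump` and `bagging` and the number of models.

```python
import numpy as np

def fit_stump(x, y):
    """Fit a one-split decision tree on 1-D data with 0/1 labels: try every
    threshold and both orientations, keep the one with the fewest errors."""
    best = None
    for t in np.unique(x):
        for sign in (1, -1):
            # sign=1 predicts class 1 for x > t; sign=-1 predicts class 1 for x < t.
            errors = np.sum((sign * (x - t) > 0).astype(int) != y)
            if best is None or errors < best[0]:
                best = (errors, t, sign)
    _, t, sign = best
    return lambda x_new: (sign * (np.asarray(x_new) - t) > 0).astype(int)

def bagging(x, y, n_models=25, seed=0):
    """Train n_models stumps on bootstrap samples; combine them by majority vote."""
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: len(x) indices drawn with replacement.
        idx = rng.integers(0, len(x), size=len(x))
        models.append(fit_stump(x[idx], y[idx]))

    def predict(x_new):
        # Fraction of stumps voting for class 1; the majority wins.
        share = np.mean([m(x_new) for m in models], axis=0)
        return (share > 0.5).astype(int)

    return predict

# Hypothetical 1-D data with a noisy boundary between classes 0 and 1.
x = np.arange(1, 11, dtype=float)
y = np.array([0, 0, 0, 0, 1, 0, 1, 1, 1, 1])

predict = bagging(x, y)
print(predict(np.array([1.5, 9.5])))
print(np.mean(predict(x) == y))
```

An odd number of models avoids tied votes in this two-class setting. Boosting would instead train the stumps one after another and reweight the points that earlier stumps got wrong.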

These are only a small sample of the many machine learning algorithms available. The best algorithm for a particular task depends on the specific requirements of that task.

The Scikit-learn library provides Python implementations of many of these algorithms for building Machine Learning models that make predictions.
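The following is a minimal scikit-learn sketch of this workflow. It assumes scikit-learn is installed and uses its bundled Iris dataset. The pipeline chains an unsupervised step (PCA, for dimensionality reduction) with a supervised classifier (logistic regression), using the library's usual `fit`/`predict`/`score` interface. The split ratio, random seed, and number of components are arbitrary choices.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Iris: 150 flowers, 4 numeric features, 3 species (a classification task).
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Standardize the features, reduce 4 dimensions to 2 with PCA (unsupervised),
# then classify with logistic regression (supervised).
model = make_pipeline(
    StandardScaler(),
    PCA(n_components=2),
    LogisticRegression(max_iter=1000),
)
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.2f}")
print(model.predict(X_test[:5]))
```

Scikit-learn estimators share the same interface. Swapping `LogisticRegression` for another estimator, such as `RandomForestClassifier` or `SVC`, therefore only changes the last step of the pipeline.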

Classical Machine Learning refers to three paradigms of learning processes: Supervised Learning, Unsupervised Learning and Reinforcement Learning.

- Identify the Machine Learning modeling process.

- Classify the main Classification algorithms, for identifying categories of objects.

- Describe the main Regression algorithms, for predicting numerical values.

- Identify the main Clustering algorithms, for grouping objects automatically.

- Recognize the main Dimensionality reduction algorithms, for reducing the number of variables in a multidimensional experiment.

- Intro to Scikit-Learn

- Supervised Learning: Regression

- Supervised Learning: Classification

- Unsupervised Learning: Dimensionality Reduction

- Unsupervised Learning: Clustering

- Ensemble Learning: Bagging

- Ensemble Learning: Boosting

- Reinforcement Learning

- Perceptrons

- Convolutional Neural Networks (CNN)

- Recurrent Neural Networks (RNN)

- Generative Adversarial Networks (GAN)

- Autoencoders

- LLMs

- Machine Learning Glossary. Google Developers.

- Cheat Sheets for Machine Learning and Data Science. Aqeel Anwar.

- Machine Learning for Everyone. Vas3k's Blog.

- Google Machine Learning Education

- Machine Learning Online Resources. UA Data Science Institute.

Created: 01-16-2023 (C. Lizárraga); Updated: 01-18-2024 (C. Lizárraga).

UArizona DataLab, Data Science Institute, 2024