📘Documentation | 🛠️Installation | 👀Model Zoo | 🆕Update News | 🤔Reporting Issues

English | 简体中文

- 🥳 🚀 What's New

- 📖 Introduction

- 🛠️ Installation

- 👨🏫 Tutorial

- 📊 Overview of Benchmark and Model Zoo

- ❓ FAQ

- 🙌 Contributing

- 🤝 Acknowledgement

- 🖊️ Citation

- 🎫 License

- 🏗️ Projects in OpenMMLab

🥳 🚀 What's New 🔝

💎 v0.5.0 was released on 2 March 2023:

- Support RTMDet-R rotated object detection

- Support using mask annotations to improve YOLOv8 object detection performance

- Support MMRazor searchable NAS sub-networks as the backbone of YOLO series algorithms

- Support calling MMRazor to distill the knowledge of RTMDet

- Restructure the MMYOLO documentation and comprehensively upgrade its content

- Improve YOLOX mAP and training speed based on RTMDet training hyperparameters

- Support calculation of model parameters and FLOPs, provide GPU latency data on T4 devices, and update Model Zoo

- Support test-time augmentation (TTA)

- Support RTMDet, YOLOv8 and YOLOv7 assigner visualization

For release history and update details, please refer to the changelog.

✨ Highlight 🔝

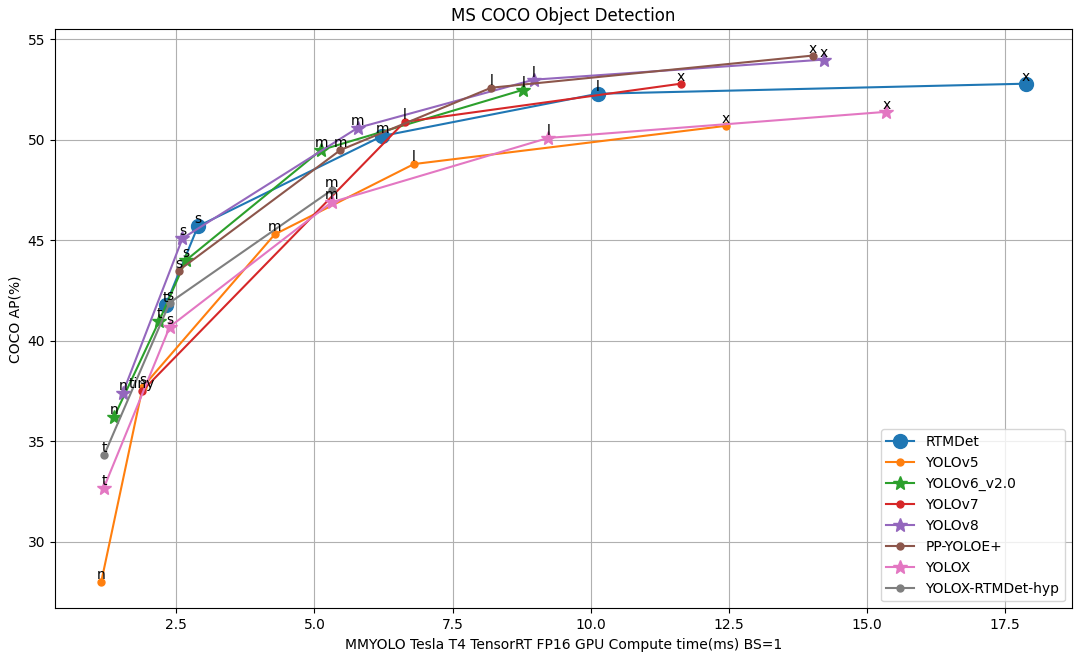

We are excited to announce our latest work on real-time object recognition tasks, RTMDet, a family of fully convolutional single-stage detectors. RTMDet not only achieves the best parameter-accuracy trade-off on object detection from tiny to extra-large model sizes but also obtains new state-of-the-art performance on instance segmentation and rotated object detection tasks. Details can be found in the technical report. Pre-trained models are here.

| Task | Dataset | AP | FPS (TensorRT FP16, batch size 1, RTX 3090) |
|---|---|---|---|
| Object Detection | COCO | 52.8 | 322 |
| Instance Segmentation | COCO | 44.6 | 188 |
| Rotated Object Detection | DOTA | 78.9 (single-scale) / 81.3 (multi-scale) | 121 |

MMYOLO currently implements the object detection and rotated object detection algorithms, with significant training acceleration over the MMDetection version: training is 2.6 times faster than in the previous version.
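The FPS figures reported above can be converted to per-image latency, which is often easier to compare against a latency budget. The snippet below is a minimal sketch of that arithmetic. The helper name `fps_to_latency_ms` is ours and not part of MMYOLO, and the conversion assumes images are processed one at a time (batch size 1) with no pipelining.

```python
def fps_to_latency_ms(fps: float) -> float:
    """Convert throughput in frames per second to mean latency in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# FPS values copied from the highlight table (batch size 1).
reported_fps = {
    "Object Detection": 322,
    "Instance Segmentation": 188,
    "Rotated Object Detection": 121,
}

for task, fps in reported_fps.items():
    print(f"{task}: {fps_to_latency_ms(fps):.2f} ms per image")
```

Under these assumptions, the three entries work out to roughly 3.11 ms, 5.32 ms, and 8.26 ms per image.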

📖 Introduction 🔝

MMYOLO is an open source toolbox for YOLO series algorithms based on PyTorch and MMDetection. It is a part of the OpenMMLab project.

The master branch works with PyTorch 1.6+.

Major features

-

🕹️ Unified and convenient benchmark

MMYOLO unifies the implementation of modules in various YOLO algorithms and provides a unified benchmark. Users can compare and analyze in a fair and convenient way.

-

📚 Rich and detailed documentation

MMYOLO provides rich documentation for getting started, model deployment, advanced usages, and algorithm analysis, making it easy for users at different levels to get started and make extensions quickly.

-

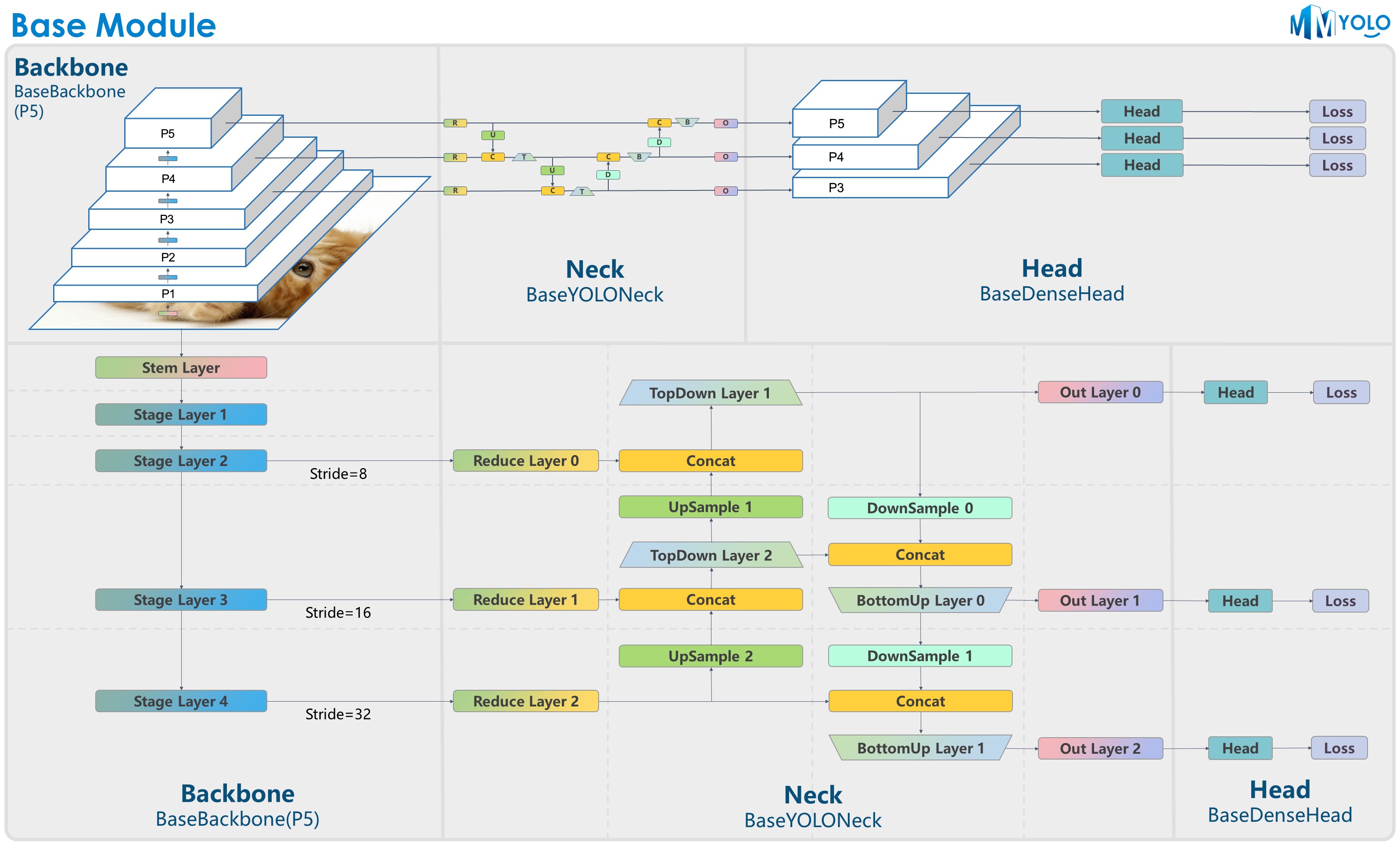

🧩 Modular Design

MMYOLO decomposes the framework into different components where users can easily customize a model by combining different modules with various training and testing strategies.

The figure above is contributed by RangeKing@GitHub, thank you very much!

The figure above is contributed by RangeKing@GitHub, thank you very much!

And the figure of P6 model is in model_design.md.
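The modular design described above can be pictured as a registry that maps names to component classes, so that a config dict selects which backbone or neck gets built. The following is a deliberately simplified, self-contained sketch of that idea. `Registry`, `ToyBackbone`, and `ToyNeck` are hypothetical names used for illustration only; they are not the MMEngine or MMYOLO classes.

```python
from typing import Any, Callable, Dict


class Registry:
    """Toy name-to-class registry (illustrative only, not MMEngine's Registry)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._modules: Dict[str, Callable[..., Any]] = {}

    def register(self, cls):
        # Used as a class decorator: store the class under its own name.
        self._modules[cls.__name__] = cls
        return cls

    def build(self, cfg: Dict[str, Any]) -> Any:
        # Copy so the caller's config is not mutated, then pop the type key.
        args = dict(cfg)
        type_name = args.pop("type")
        if type_name not in self._modules:
            raise KeyError(f"{type_name!r} is not registered in {self.name!r}")
        return self._modules[type_name](**args)


MODELS = Registry("models")


@MODELS.register
class ToyBackbone:
    def __init__(self, depth: int = 3) -> None:
        self.depth = depth


@MODELS.register
class ToyNeck:
    def __init__(self, in_channels: int, out_channels: int) -> None:
        self.in_channels = in_channels
        self.out_channels = out_channels


# Swapping a component only means editing the config dict.
backbone = MODELS.build(dict(type="ToyBackbone", depth=5))
neck = MODELS.build(dict(type="ToyNeck", in_channels=256, out_channels=128))
print(type(backbone).__name__, backbone.depth)
print(type(neck).__name__, neck.out_channels)
```

The point of the pattern is that training and testing code only ever sees config dicts, so replacing one module with another does not require touching the code that assembles the model.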

🛠️ Installation 🔝

MMYOLO relies on PyTorch, MMCV, MMEngine, and MMDetection. Below are quick steps for installation. Please refer to the Install Guide for more detailed instructions.

```shell
conda create -n mmyolo python=3.8 pytorch==1.10.1 torchvision==0.11.2 cudatoolkit=11.3 -c pytorch -y
conda activate mmyolo
pip install openmim
mim install "mmengine>=0.6.0"
mim install "mmcv>=2.0.0rc4,<2.1.0"
mim install "mmdet>=3.0.0rc6,<3.1.0"
git clone https://github.com/open-mmlab/mmyolo.git
cd mmyolo
# Install albumentations
pip install -r requirements/albu.txt
# Install MMYOLO
mim install -v -e .
```

👨🏫 Tutorial 🔝
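Before working through the tutorials, it can be worth confirming that the installation steps above picked up the expected packages. The snippet below is a small sketch using only the Python standard library. The distribution names in `PACKAGES` are our assumption based on the install commands, and the helper `installed_versions` is ours, not an MMYOLO or MIM utility.

```python
from importlib import metadata
from typing import Dict, Optional, Sequence

# Distribution names assumed from the install commands; "mmyolo" is the
# editable install of this repository.
PACKAGES = ("torch", "mmengine", "mmcv", "mmdet", "mmyolo")


def installed_versions(names: Sequence[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Return {distribution name: version string, or None if not installed}."""
    versions: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:10s} {version or 'NOT INSTALLED'}")
```

Compare the printed versions by hand against the ranges in the install commands, for example `mmcv>=2.0.0rc4,<2.1.0`. The script only reports what is installed and does not check the ranges itself.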

MMYOLO is based on MMDetection and adopts the same code structure and design approach. To get the most out of it, please read the MMDetection Overview first for a basic understanding of MMDetection.

The usage of MMYOLO is almost identical to that of MMDetection, and all tutorials are straightforward to use. You can also consult the MMDetection User Guide and Advanced Guide.

For the parts that differ from MMDetection, we have also prepared user guides and advanced guides; please read our documentation.

Get Started

Recommended Topics

- How to contribute code to MMYOLO

- MMYOLO model design

- Algorithm principles and implementation

- Replace the backbone network

- MMYOLO model complexity analysis

- Annotation-to-deployment workflow for custom dataset

- Visualization

- Model deployment

- Troubleshooting steps

- MMYOLO industry examples

- MM series repo essential basics

- Dataset preparation and description

Common Usage

- Resume training

- Enabling and disabling SyncBatchNorm

- Enabling AMP

- TTA Related Notes

- Add plugins to the backbone network

- Freeze layers

- Output model predictions

- Set random seed

- Module combination

- Cross-library calls using mim

- Apply multiple Necks

- Specify a device for training or inference

- Single and multi-channel application examples
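Several of the common usage topics above come down to a few config overrides. The fragment below is a hedged sketch of what such overrides might look like in an MMEngine-style Python config. The field names follow MMEngine's config conventions as we understand them, the values are illustrative, and a real config would inherit from an existing MMYOLO config through a `_base_` entry that is left out here. Please check the linked guides for the authoritative settings.

```python
# Hypothetical override config for illustration. In a real project this file
# would start with a `_base_ = ...` line pointing at an existing MMYOLO config,
# and these dicts would be merged into the inherited settings.

# Resume training: continue from the latest checkpoint in the work directory.
resume = True

# Set random seed: `deterministic=True` trades some speed for reproducibility.
randomness = dict(seed=2023, deterministic=True)

# Enabling AMP: switch the optimizer wrapper to its mixed-precision variant.
# The optimizer itself is assumed to come from the inherited base config.
optim_wrapper = dict(type="AmpOptimWrapper", loss_scale="dynamic")

# Freeze layers: freeze the early part of the backbone. Which layers each
# value covers is described in the "Freeze layers" guide.
model = dict(backbone=dict(frozen_stages=1))
```

Keeping such overrides in a small child config, rather than editing the base config, makes it easier to see which settings differ between experiments.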

Useful Tools

Basic Tutorials

Advanced Tutorials

Descriptions

📊 Overview of Benchmark and Model Zoo 🔝

Results and models are available in the model zoo.

Supported Tasks

- Object detection

- Rotated object detection

Supported Datasets

- COCO Dataset

- VOC Dataset

- CrowdHuman Dataset

- DOTA 1.0 Dataset

Supported components are grouped into four categories: Backbones, Necks, Loss, and Common.

❓ FAQ 🔝

Please refer to the FAQ for frequently asked questions.

🙌 Contributing 🔝

We appreciate all contributions to improving MMYOLO. Ongoing projects can be found in our GitHub Projects, and community users are welcome to participate in them. Please refer to CONTRIBUTING.md for the contributing guidelines.

🤝 Acknowledgement 🔝

MMYOLO is an open source project that is contributed by researchers and engineers from various colleges and companies. We appreciate all the contributors who implement their methods or add new features, as well as users who give valuable feedback. We wish that the toolbox and benchmark could serve the growing research community by providing a flexible toolkit to re-implement existing methods and develop their own new detectors.

🖊️ Citation 🔝

If you find this project useful in your research, please consider citing:

```bibtex
@misc{mmyolo2022,
    title={{MMYOLO: OpenMMLab YOLO} series toolbox and benchmark},
    author={MMYOLO Contributors},
    howpublished = {\url{https://github.com/open-mmlab/mmyolo}},
    year={2022}
}
```

🎫 License 🔝

This project is released under the GPL 3.0 license.

🏗️ Projects in OpenMMLab 🔝

- MMEngine: OpenMMLab foundational library for training deep learning models.

- MMCV: OpenMMLab foundational library for computer vision.

- MIM: MIM installs OpenMMLab packages.

- MMClassification: OpenMMLab image classification toolbox and benchmark.

- MMDetection: OpenMMLab detection toolbox and benchmark.

- MMDetection3D: OpenMMLab's next-generation platform for general 3D object detection.

- MMRotate: OpenMMLab rotated object detection toolbox and benchmark.

- MMYOLO: OpenMMLab YOLO series toolbox and benchmark.

- MMSegmentation: OpenMMLab semantic segmentation toolbox and benchmark.

- MMOCR: OpenMMLab text detection, recognition, and understanding toolbox.

- MMPose: OpenMMLab pose estimation toolbox and benchmark.

- MMHuman3D: OpenMMLab 3D human parametric model toolbox and benchmark.

- MMSelfSup: OpenMMLab self-supervised learning toolbox and benchmark.

- MMRazor: OpenMMLab model compression toolbox and benchmark.

- MMFewShot: OpenMMLab fewshot learning toolbox and benchmark.

- MMAction2: OpenMMLab's next-generation action understanding toolbox and benchmark.

- MMTracking: OpenMMLab video perception toolbox and benchmark.

- MMFlow: OpenMMLab optical flow toolbox and benchmark.

- MMEditing: OpenMMLab image and video editing toolbox.

- MMGeneration: OpenMMLab image and video generative models toolbox.

- MMDeploy: OpenMMLab model deployment framework.

- MMEval: OpenMMLab machine learning evaluation library.