Davide Gerosa - davide.gerosa@unimib.it

University of Milano-Bicocca, 2024.

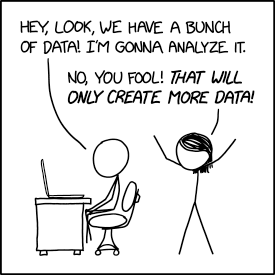

The use of statistics is ubiquitous in astronomy and astrophysics. Modern advances are made possible by the application of increasingly sophisticated tools, often dubbed "data mining", "machine learning", and "artificial intelligence". This class provides an introduction to (some of) these statistical techniques in a very practical fashion, pairing formal derivations with hands-on computational applications. Although examples will be taken almost exclusively from the realm of astronomy, this class is appropriate for all Physics students interested in machine learning.

- Introduction I. Data mining and machine learning. My research interests. Python setup. Version control with git. *

- Probability and Statistics I. Probability. Bayes' theorem. Random variables. *

- Probability and Statistics II. Monte Carlo integration. Descriptive statistics. Common distributions. *

- Probability and Statistics III. Central limit theorem. Multivariate pdfs. Correlation coefficients. Sampling from arbitrary pdfs. *

- Frequentist Statistical Inference: I. Frequentist vs Bayesian inference. Maximum likelihood estimation. Homoscedastic Gaussian data, heteroscedastic Gaussian data, non-Gaussian data. *

- Frequentist Statistical Inference: II. Maximum likelihood fit. Role of outliers. Goodness of fit. Model comparison. Gaussian mixtures. Bootstrap and jackknife. *

- Frequentist Statistical Inference: III. Hypothesis testing. Comparing distributions, KS test. Histograms. Kernel density estimators. *

- Bayesian Statistical Inference: I. The Bayesian approach to statistics. Prior distributions. Credible regions. Parameter estimation examples (coin flip). Marginalization.

- Bayesian Statistical Inference: II. Parameter estimation examples (Gaussian data, background). Model comparison: odds ratio. Approximate model comparison.

- Bayesian Statistical Inference: III. Monte Carlo methods. Markov chains. Burn-in. Metropolis-Hastings algorithm. *

- Bayesian Statistical Inference: IV. MCMC diagnostics. Traceplots. Autocorrelation length. Samplers in practice: emcee and PyMC3. Gibbs sampling. Conjugate priors. *

- Bayesian Statistical Inference: V. Evidence evaluation. Model selection. Savage-Dickey density ratio. Nested sampling. Samplers in practice: dynesty. *

- Introduction II. Data mining and machine learning. Supervised and unsupervised learning. Overview of scikit-learn. Examples. *

- Clustering. K-fold cross validation. Unsupervised clustering. K-Means Clustering. Mean-shift Clustering. Correlation functions. *

- Dimensional Reduction I. Curse of dimensionality. Principal component analysis. Missing data. Non-negative matrix factorization. Independent component analysis. *

- Dimensional Reduction II - Density estimation. Non-linear dimensional reduction. Locally linear embedding. Isometric mapping. t-SNE. Recap of density estimation. KDE. Nearest-Neighbor. Gaussian Mixtures. Pills of modern research.

- Regression I. What is regression? Linear regression. Polynomial regression. Basis function regression. Kernel regression. Over/under fitting. Cross validation. Learning curves. *

- Regression II. Regularization. Ridge. LASSO. Non-linear regression. Gaussian process regression. Total least squares. *

- Classification I. Generative vs discriminative classification. Receiver Operating Characteristic (ROC) curve. Naive Bayes. Gaussian naive Bayes. Linear and quadratic discriminant analysis. GMM Bayes classification. K-nearest neighbor classifier. *

- Classification II. Logistic regression. Support vector machines. Decision trees. Bagging. Random forests. Boosting. *

- Deep learning I. Loss functions. Gradient descent, learning rate. Adaptive boosting. Neural networks. Backpropagation. Layers, neurons, activation functions, regularization schemes. *

- Deep learning II. TensorFlow, Keras, and PyTorch. Convolutional neural networks. Autoencoders. Generative adversarial networks. *

- Time series analysis I. Detecting variability. Fourier analysis. Temporally localized signals. Periodic signals. Lomb-Scargle periodogram. Multiband strategies. *

- Time series analysis II. Stochastic processes. Autoregressive models. Moving averages. Power-spectral density. Autocorrelation. White/red/pink noise. Unevenly sampled data.

* = Time to get your hands dirty!

Data mining and machine learning are computational subjects. One does not learn how to treat scientific data by reading equations on the blackboard: you will need to get your hands dirty (and this is the fun part!). Students are required to come to class with a laptop or any other device they can code on (larger than a smartphone, I would say...). Each class will pair theoretical explanations with hands-on exercises and demonstrations. These are the key content of the course, so please engage with them as much as possible.

At various points during the lectures you will find some "Time to get your hands dirty" statements. That means you've got to start coding!

Credits: Steve Taylor (Vanderbilt)

The main textbook we will be using is:

"Statistics, Data Mining, and Machine Learning in Astronomy", Ivezić, Connolly, VanderPlas, and Gray. Princeton University Press, 2012.

It's a wonderful book that I keep referring to in my research. The library has a few copies; you can also download a digital version from the Bicocca library website. What I really like about the book is that the authors provide the code behind every single figure: astroml.org/book_figures. The best way to approach these topics is to study the introduction in the book, then grab the code and play with it. Make sure you get the updated edition of the book (that's the one with a black cover, not orange) because all the examples have been updated to Python 3.

There are many other good resources in astrostatistics; here is a partial list. Some of them are free.

- "Statistical Data Analysis", Cowan. Oxford Science Publications, 1997.

- "Data Analysis: A Bayesian Tutorial", Sivia and Skilling. Oxford Science Publications, 2006.

- "Bayesian Data Analysis", Gelman, Carlin, Stern, Dunson, Vehtari, and Rubin. Chapman & Hall, 2013. Free!

- "Python Data Science Handbook", VanderPlas. O'Reilly Media, 2016. Free!

- "Practical Statistics for Astronomers", Wall and Jenkins. Cambridge University Press, 2003.

- "Bayesian Logical Data Analysis for the Physical Sciences", Gregory. Cambridge University Press, 2005.

- "Modern Statistical Methods For Astronomy", Feigelson and Babu. Cambridge University Press, 2012.

- "Information theory, inference, and learning algorithms", MacKay. Cambridge University Press, 2003. Free!

- "Data analysis recipes". These are free chapters of a book by Hogg et al. that is not yet finished.

- "Choosing the binning for a histogram" [arXiv:0807.4820]

- "Fitting a model to data" [arXiv:1008.4686]

- "Probability calculus for inference" [arXiv:1205.4446]

- "Using Markov Chain Monte Carlo" [arXiv:1710.06068]

- "Products of multivariate Gaussians in Bayesian inferences" [arXiv:2005.14199]

- "Practical Guidance for Bayesian Inference in Astronomy", Eadie et al., 2023.

- "Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow", Géron, O'Reilly Media, 2019.

- "Machine Learning for Physics and Astronomy", Acquaviva, Princeton University Press, 2023.

We will make heavy use of the Python programming language. If you need to refresh your Python skills, here are some catch-up resources and online tutorials. A strong Python programming background is essential in modern astrophysics!

- "Scientific Computing with Python", D. Gerosa. This is a class I teach for the PhD School here at Milano-Bicocca.

- "Lectures on scientific computing with Python", R. Johansson et al.

- "Python Programming for Scientists", T. Robitaille et al.

- "Learning Scientific Programming with Python", Hill, Cambridge University Press, 2020. Supporting code: scipython.com.

The class covers 6 credits = 42 hours = 21 lectures of 2 hours each. Our weekly timeslots are Monday 8.30am-10.30am (sorry) and Thursday 10.30am-12.30pm. Note that there are a few extra lectures on different days/times, as well as a few weeks where we're going to skip classes. We're in room U2-05 on the main Bicocca campus.

- 04-03-24, 8.30am.

- 07-03-24, 10.30am.

- 11-03-24, 8.30am.

- 14-03-24, 10.30am.

- 18-03-24, Davide is on a graduation committee

- 19-03-24, 8.30am. (Note different day!)

- 21-03-24, 10.30am.

- 25-03-24, 10.30am. (Note different time!)

- 28-03-24, Holiday

- 01-04-24, Holiday

- 04-04-24, Davide is away for research

- 08-04-24, 8.30am.

- 11-04-24, Davide is away for research

- 12-04-24, 8.30am. (Note different day!)

- 15-04-24, 8.30am.

- 18-04-24, Davide is away for research

- 22-04-24, 8.30am.

- 25-04-24, Holiday

- 29-04-24, 8.30am.

- 30-04-24, 10.30am. (Note different day!)

- 02-05-24, 10.30am.

- 06-05-24, 8.30am.

- 09-05-24, 10.30am.

- 13-05-24, 8.30am.

- 16-05-24, 10.30am.

- 20-05-24, 8.30am.

- 23-05-24, 10.30am.

- 27-05-24, 8.30am.

- 30-05-24, 10.30am. Could add a lecture here if we need it.

This class draws heavily from many others that came before it. Credit goes to:

- Stephen Taylor (Vanderbilt University): github.com/VanderbiltAstronomy/astr_8070_s21.

- Gordon Richards (Drexel University): github.com/gtrichards/PHYS_440_540.

- Jake Vanderplas (University of Washington): github.com/jakevdp/ESAC-stats-2014.

- Zeljko Ivezic (University of Washington): github.com/uw-astr-302-w18/astr-302-w18.

- Andy Connolly (University of Washington): cadence.lsst.org/introAstroML/.

- Karen Leighly (University of Oklahoma): seminar.ouml.org/.

- Adam Miller (Northwestern University): github.com/LSSTC-DSFP/LSSTC-DSFP-Sessions/.

- Jo Bovy (University of Toronto): astro.utoronto.ca/~bovy/teaching.html.

- Nicolas Audebert (IGN Paris): github.com/nshaud/ml_for_astro

- Thomas Wiecki (PyMC Labs): twiecki.github.io/blog/2015/11/10/mcmc-sampling.

- Aurélien Géron (freelancer): github.com/ageron/handson-ml2.

Credit: xkcd 2582