This repository contains the code and configuration files for setting up Karpenter on an existing Amazon EKS cluster, as described in the blog post Karpenter Setup on Existing EKS cluster: Optimizing Kubernetes Node Management.

- Introduction

- Brief Overview of Karpenter

- Key Differences from Cluster Autoscaler

- Prerequisites

- Setup Steps

- Testing the Setup

- Repository Contents

- Usage

- Contributing

- License

- Acknowledgments

- References and Resources

This guide walks you through the process of setting up Karpenter on an existing Amazon EKS cluster. Karpenter is an open-source node provisioning project that automatically launches just the right compute resources to handle your Kubernetes cluster's applications.

Karpenter is designed to improve the efficiency and cost of running workloads on Kubernetes by:

- Just-in-Time Provisioning: Rapidly launching nodes in response to pending pods, often in seconds.

- Bin-Packing: Efficiently packing pods onto nodes to maximize resource utilization.

- Diverse Instance Types: Provisioning a wide range of instance types, including spot instances, to optimize for cost and performance.

- Custom Resource Provisioning: Allowing fine-grained control over node provisioning based on pod requirements.

- Automatic Node Termination: Removing nodes that are no longer needed, helping to reduce costs.

- Karpenter doesn't rely on node groups, offering more flexibility in instance selection.

- It can provision nodes faster and more efficiently, reducing scheduling latency.

- Karpenter integrates directly with the AWS APIs, allowing for more efficient cloud resource management.

- For more details, see this comparison.

- AWS CLI and kubectl installed and configured

- Helm installed

- Terraform - for IAM role and policy setup

- Existing EKS cluster setup

- Existing VPC and subnets

- Existing security groups

- Nodes in one or more node groups

- OIDC provider for service accounts in your cluster

- Set Environment Variables

- Create OpenID Connect (OIDC) provider

- Set Up IAM Roles and Policies

- Tag Subnets and Security Groups

- Update aws-auth ConfigMap

- Deploy Karpenter

- Create NodePool

Define cluster-specific information and AWS account details that will be used throughout the migration process.

KARPENTER_NAMESPACE=kube-system

CLUSTER_NAME=<your cluster name>

AWS_PARTITION="aws"

AWS_REGION="$(aws configure list | grep region | tr -s " " | cut -d" " -f3)"

OIDC_ENDPOINT="$(aws eks describe-cluster --name "${CLUSTER_NAME}" \

--query "cluster.identity.oidc.issuer" --output text)"

AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' \

--output text)

K8S_VERSION=1.28

ARM_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2-arm64/recommended/image_id --query Parameter.Value --output text)"

AMD_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2/recommended/image_id --query Parameter.Value --output text)"

GPU_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2-gpu/recommended/image_id --query Parameter.Value --output text)"Make sure to replace <your cluster name> with your actual EKS cluster name.

Check if you have an existing IAM OIDC provider for your cluster

Create an IAM OIDC provider for your cluster

Set up the necessary permissions for Karpenter to interact with AWS services. This includes a role for Karpenter-provisioned nodes and a role for the Karpenter controller itself.

In this step, Terraform is used to create the necessary IAM roles and policies for Karpenter to function properly with your EKS cluster setup. Specifically, it's creating:

-

A Karpenter Node IAM Role: This role is assumed by the EC2 instances that Karpenter creates, allowing them to join the EKS cluster and access necessary AWS resources.

-

A Karpenter Controller IAM Role: An IAM role that the Karpenter controller will use to provision new instances. The controller will be using IAM Roles for Service Accounts (IRSA) which requires an OIDC endpoint.

The Terraform configuration uses the variables defined in the variables.tf file (such as aws_region, cluster_name, karpenter_namespace, and k8s_version) to customize the IAM resources for your specific EKS cluster setup.

By using Terraform for this step, you ensure that all the necessary IAM resources are created consistently and can be easily managed as infrastructure as code.

terraform init

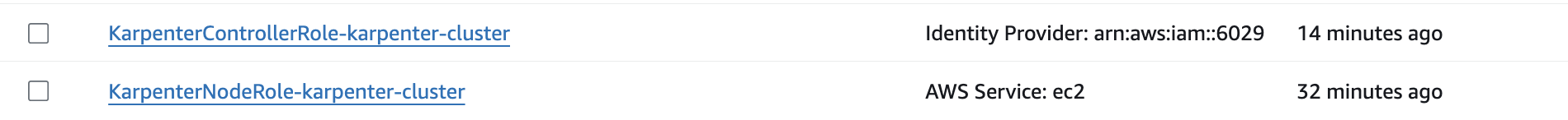

terraform applyFrom the terrrafrom script, you will create 2 IAM Roles:

After applying the Terraform configuration, tag the subnets and security groups to enable Karpenter to discover and use the appropriate subnets and security groups when provisioning new nodes.

Use the following AWS CLI commands to tag the relevant resources:

for NODEGROUP in $(aws eks list-nodegroups --cluster-name "${CLUSTER_NAME}" --query 'nodegroups' --output text); do

aws ec2 create-tags \

--tags "Key=karpenter.sh/discovery,Value=${CLUSTER_NAME}" \

--resources $(aws eks describe-nodegroup --cluster-name "${CLUSTER_NAME}" \

--nodegroup-name "${NODEGROUP}" --query 'nodegroup.subnets' --output text )

done

NODEGROUP=$(aws eks list-nodegroups --cluster-name "${CLUSTER_NAME}" \

--query 'nodegroups[0]' --output text)

LAUNCH_TEMPLATE=$(aws eks describe-nodegroup --cluster-name "${CLUSTER_NAME}" \

--nodegroup-name "${NODEGROUP}" --query 'nodegroup.launchTemplate.{id:id,version:version}' \

--output text | tr -s "\t" ",")

# For EKS setups using only Cluster security group:

SECURITY_GROUPS=$(aws eks describe-cluster \

--name "${CLUSTER_NAME}" --query "cluster.resourcesVpcConfig.clusterSecurityGroupId" --output text)

# For setups using security groups in the Launch template of a managed node group:

SECURITY_GROUPS="$(aws ec2 describe-launch-template-versions \

--launch-template-id "${LAUNCH_TEMPLATE%,*}" --versions "${LAUNCH_TEMPLATE#*,}" \

--query 'LaunchTemplateVersions[0].LaunchTemplateData.[NetworkInterfaces[0].Groups||SecurityGroupIds]' \

--output text)"

aws ec2 create-tags \

--tags "Key=karpenter.sh/discovery,Value=${CLUSTER_NAME}" \

--resources "${SECURITY_GROUPS}"Update aws-auth ConfigMap to allow nodes created by Karpenter to join the EKS cluster by granting the necessary permissions to the Karpenter node IAM role.

kubectl edit configmap aws-auth -n kube-systemAdd the following section to the mapRoles:

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}

username: system:node:{{EC2PrivateDNSName}}Replace ${AWS_PARTITION}, ${AWS_ACCOUNT_ID}, and ${CLUSTER_NAME} with your actual values.

Install the Karpenter controller and its associated resources in the cluster, configuring it to work with your specific EKS setup.

export KARPENTER_VERSION="1.0.1"

helm template karpenter oci://public.ecr.aws/karpenter/karpenter --version "${KARPENTER_VERSION}" --namespace "${KARPENTER_NAMESPACE}" \

--set "settings.clusterName=${CLUSTER_NAME}" \

--set "serviceAccount.annotations.eks\.amazonaws\.com/role-arn=arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterControllerRole-${CLUSTER_NAME}" \

--set controller.resources.requests.cpu=1 \

--set controller.resources.requests.memory=1Gi \

--set controller.resources.limits.cpu=1 \

--set controller.resources.limits.memory=1Gi > karpenter.yamlEnsure Karpenter runs on existing node group nodes, maintaining high availability and preventing it from scheduling itself on nodes it manages.

Edit the karpenter.yaml file to set node affinity:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: karpenter.sh/nodepool

operator: DoesNotExist

- key: eks.amazonaws.com/nodegroup

operator: In

values:

- ${NODEGROUP}

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- topologyKey: "kubernetes.io/hostname"Replace ${NODEGROUP} with your actual node group name.

kubectl create namespace "${KARPENTER_NAMESPACE}" || true

kubectl create -f \

"https://raw.githubusercontent.com/aws/karpenter-provider-aws/v${KARPENTER_VERSION}/pkg/apis/crds/karpenter.sh_nodepools.yaml"

kubectl create -f \

"https://raw.githubusercontent.com/aws/karpenter-provider-aws/v${KARPENTER_VERSION}/pkg/apis/crds/karpenter.k8s.aws_ec2nodeclasses.yaml"

kubectl create -f \

"https://raw.githubusercontent.com/aws/karpenter-provider-aws/v${KARPENTER_VERSION}/pkg/apis/crds/karpenter.sh_nodeclaims.yaml"

kubectl apply -f karpenter.yamlDefine the default configuration for nodes that Karpenter will provision, including instance types, capacity type, and other constraints.

Create a nodepool.yaml file with your desired configuration and apply it:

apiVersion: karpenter.sh/v1beta1

kind: NodePool

metadata:

name: default #can change

spec:

template:

spec:

requirements:

- key: kubernetes.io/arch

operator: In

values: ["amd64", "arm64"]

- key: kubernetes.io/os

operator: In

values: ["linux"]

- key: karpenter.sh/capacity-type

operator: In

values: ["spot"] #on-demand #prioritizes spot

- key: karpenter.k8s.aws/instance-category

operator: In

values: ["c", "m", "r"]

- key: karpenter.k8s.aws/instance-generation

operator: Gt

values: ["2"]

nodeClassRef:

apiVersion: karpenter.k8s.aws/v1beta1

kind: EC2NodeClass

name: default

limits:

cpu: 1000

# disruption:

# consolidationPolicy: WhenUnderutilized

# expireAfter: 720h # 30 * 24h = 720h

disruption:

consolidationPolicy: WhenEmpty

consolidateAfter: 30s

---

apiVersion: karpenter.k8s.aws/v1beta1

kind: EC2NodeClass

metadata:

name: default

spec:

amiFamily: AL2 # Amazon Linux 2

role: "KarpenterNodeRole-karpenter-cluster" # replace with your cluster name

subnetSelectorTerms:

- tags:

karpenter.sh/discovery: karpenter-cluster # replace with your cluster name

securityGroupSelectorTerms:

- tags:

karpenter.sh/discovery: karpenter-cluster # replace with your cluster name

amiSelectorTerms:

- id: ami-0d1d7c8c32a476XXX

- id: ami-0f91781eb6d40dXXX

- id: ami-058b426ded9842XXX # <- GPU Optimized AMD AMI

# - name: "amazon-eks-node-${K8S_VERSION}-*" # <- automatically upgrade when a new AL2 EKS Optimized AMI is released. This is unsafe for production workloads. Validate AMIs in lower environments before deploying them to production.kubectl apply -f nodepool.yamlDeploy a sample application to test Karpenter's autoscaling capabilities:

Create a file named inflate.yaml with the following content:

apiVersion: apps/v1

kind: Deployment

metadata:

name: inflate

spec:

replicas: 4

selector:

matchLabels:

app: inflate

template:

metadata:

labels:

app: inflate

spec:

terminationGracePeriodSeconds: 0

securityContext:

runAsUser: 1000

runAsGroup: 3000

fsGroup: 2000

containers:

- name: inflate

image: public.ecr.aws/eks-distro/kubernetes/pause:3.7

resources:

requests:

# cpu: 100m

cpu: 1

securityContext:

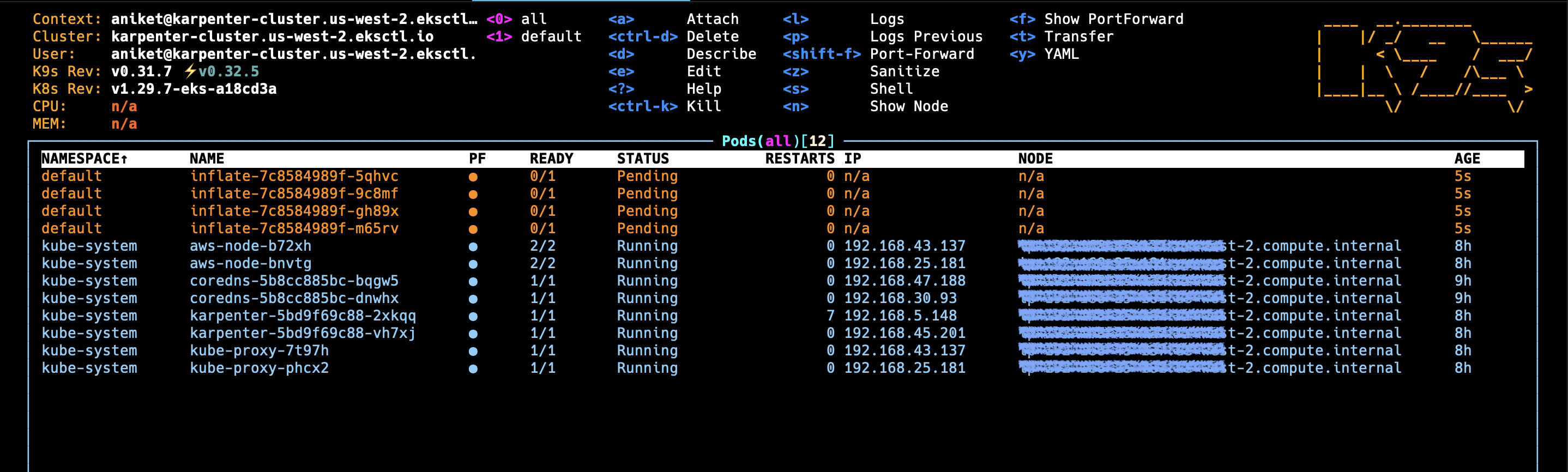

allowPrivilegeEscalation: falseThis deployment creates 4 replicas of a pod that requests 1 CPU core each. The pause container is a minimal container that does nothing but sleep, making it perfect for testing resource allocation without actually consuming resources.

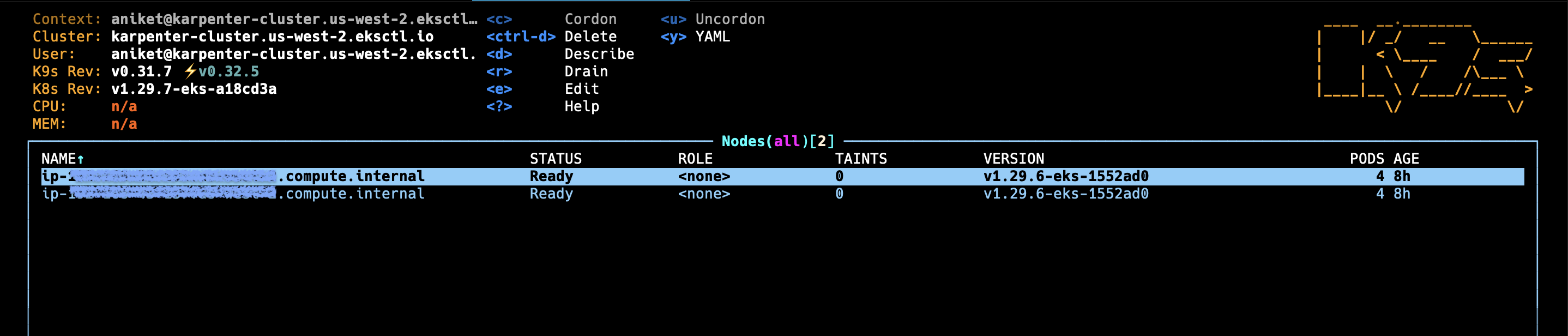

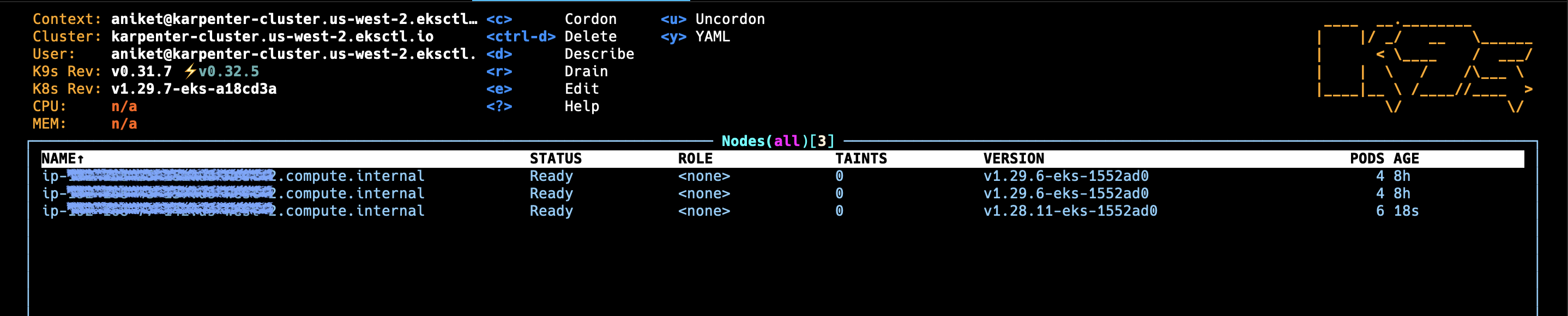

▪︎ Initially we had 2 Nodes:

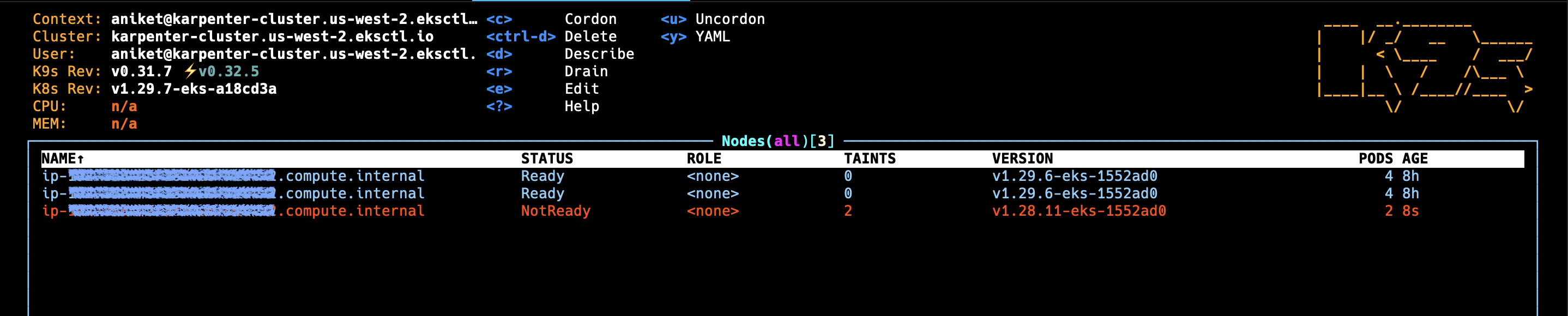

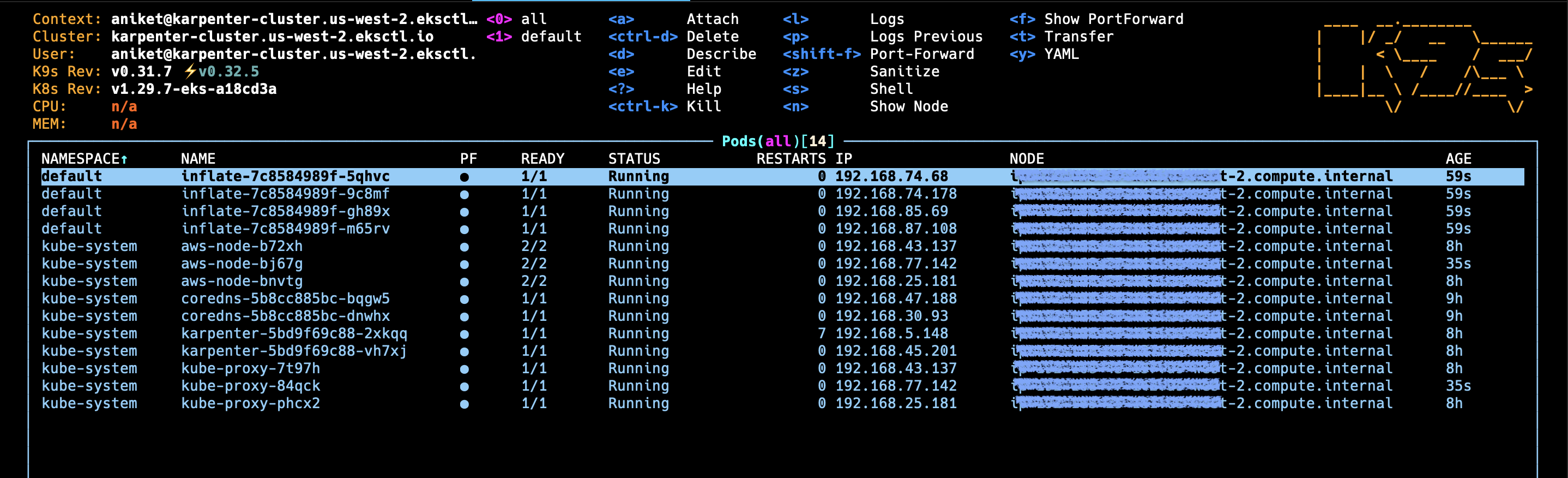

kubectl apply -f inflate.yaml▪︎ After deploying inflate application:

If Karpenter is working correctly, you should see new nodes being added to your cluster to accommodate the inflate pods. This demonstrates Karpenter's ability to automatically scale your cluster based on resource demands.

▪︎ Delete the Deployment

kubectl delete -f inflate.yamlAnd you will see the node which was created will be deleted.

terraform/: Directory containing Terraform configurationsmain.tf: Main Terraform configurationvariables.tf: Terraform variablesoutputs.tf: Terraform outputsversions.tf: Terraform version constraintsnode_role.tf: IAM role for Karpenter nodescontroller_role.tf: IAM role for Karpenter controller

karpenter.yaml: Karpenter Helm chart valuesnodepool.yaml: Karpenter NodePool and EC2NodeClass configurationinflate.yaml: Sample application for testing Karpenter

- Clone this repository

- Set up your AWS credentials

- Modify the Terraform variables in

terraform/variables.tfas needed - Follow the steps in the blog post to deploy Karpenter

Contributions are welcome! Please feel free to submit a Pull Request.

This project is licensed under the MIT License - see the LICENSE file for details.

- Karpenter Migration Guide

- EKS OIDC Troubleshooting

- EKS Blueprints Karpenter Pattern

- Karpenter Best Practices

For more detailed instructions, please refer to the full blog post.