This repository is my bachelor's graduation project, and it also serves as a study of TensorFlow and deep learning (CNN, RNN, etc.).

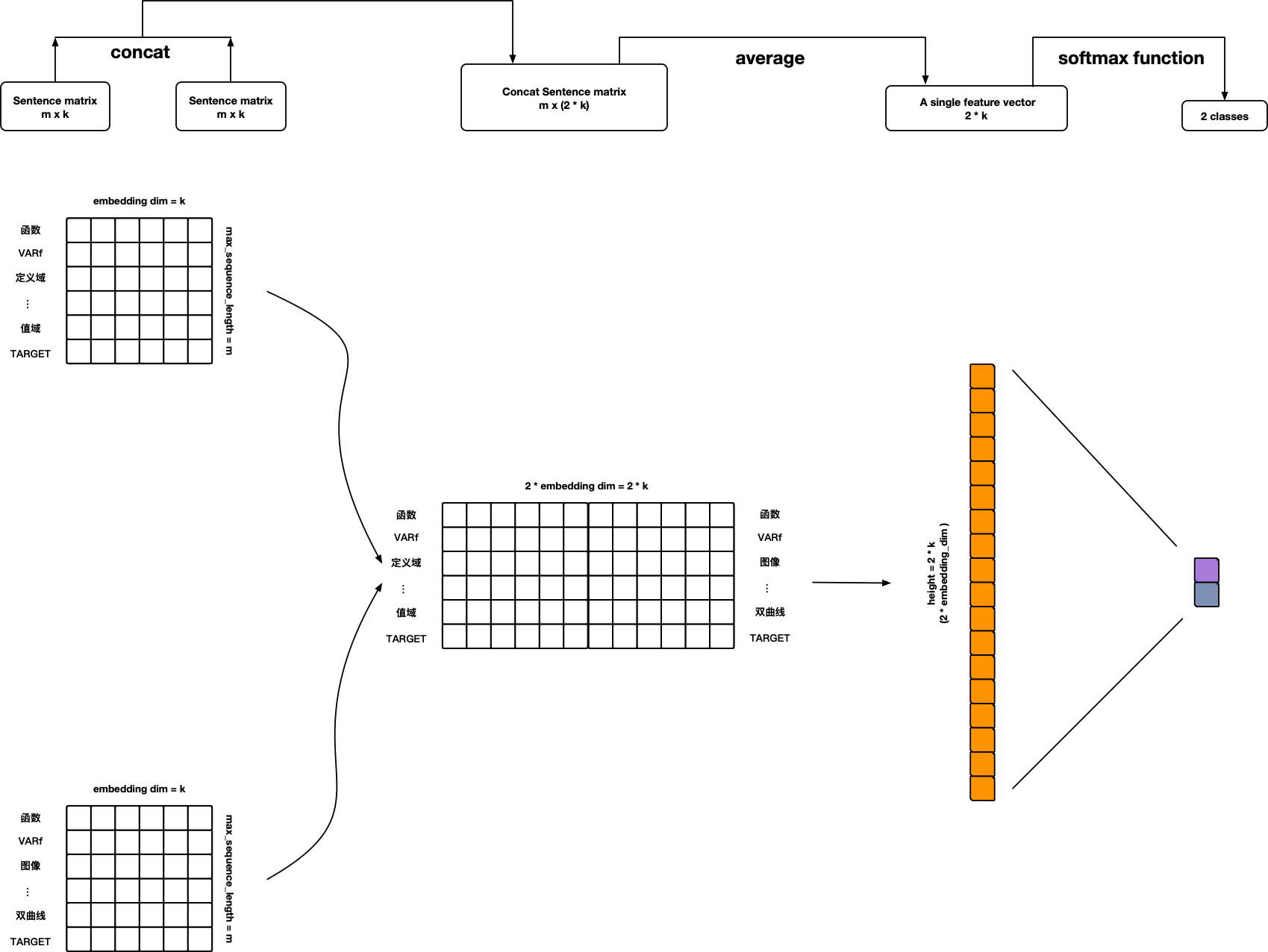

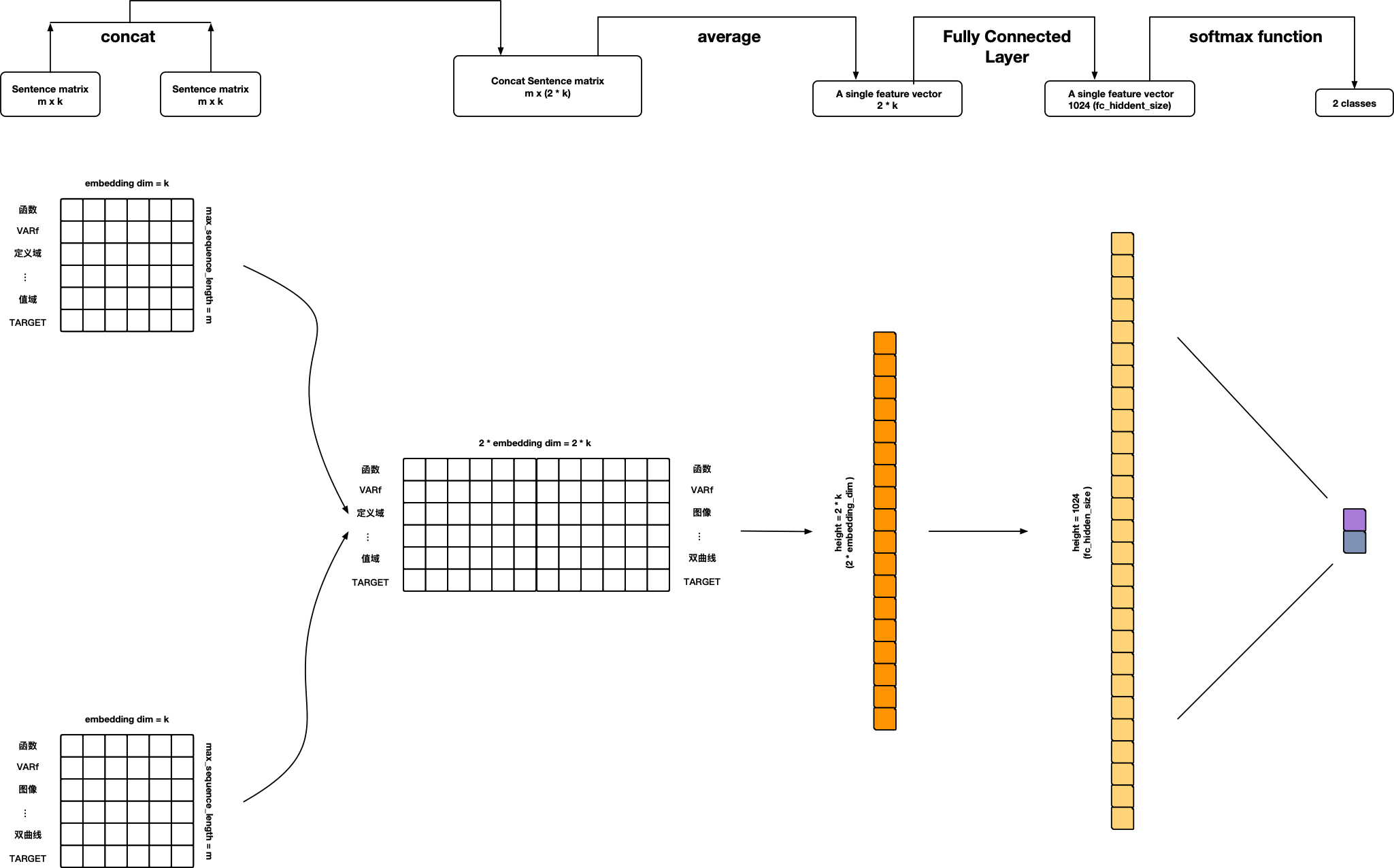

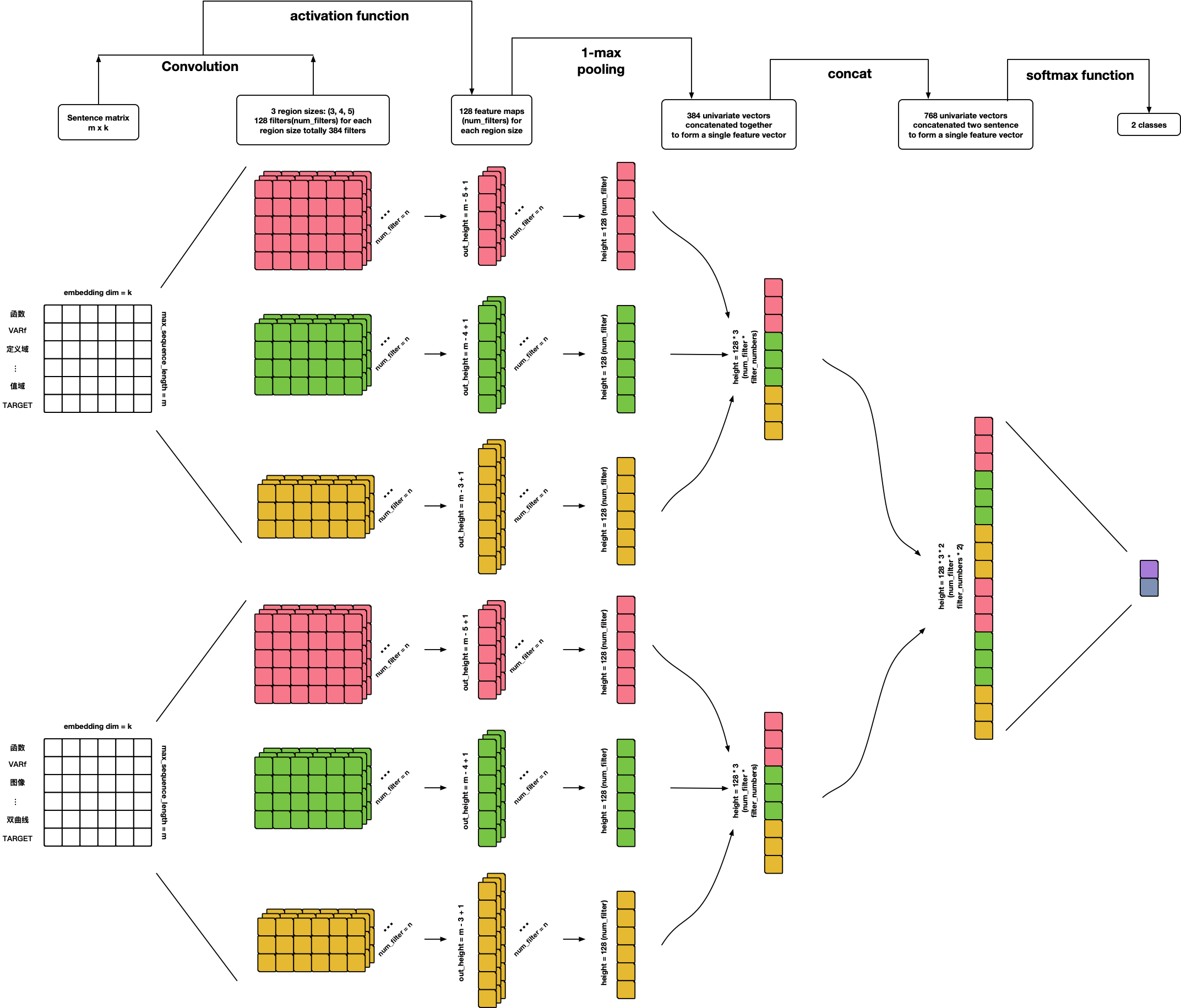

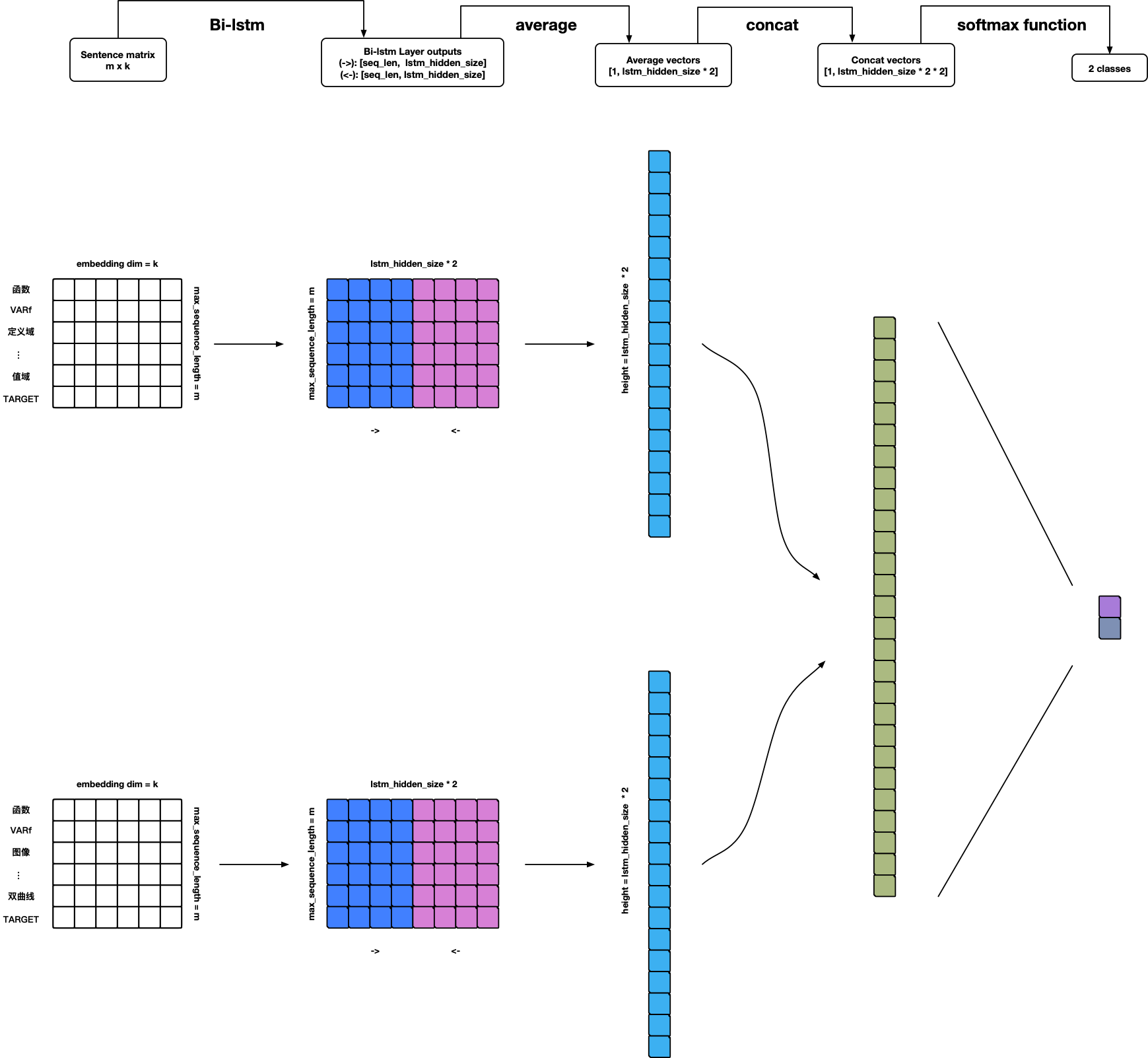

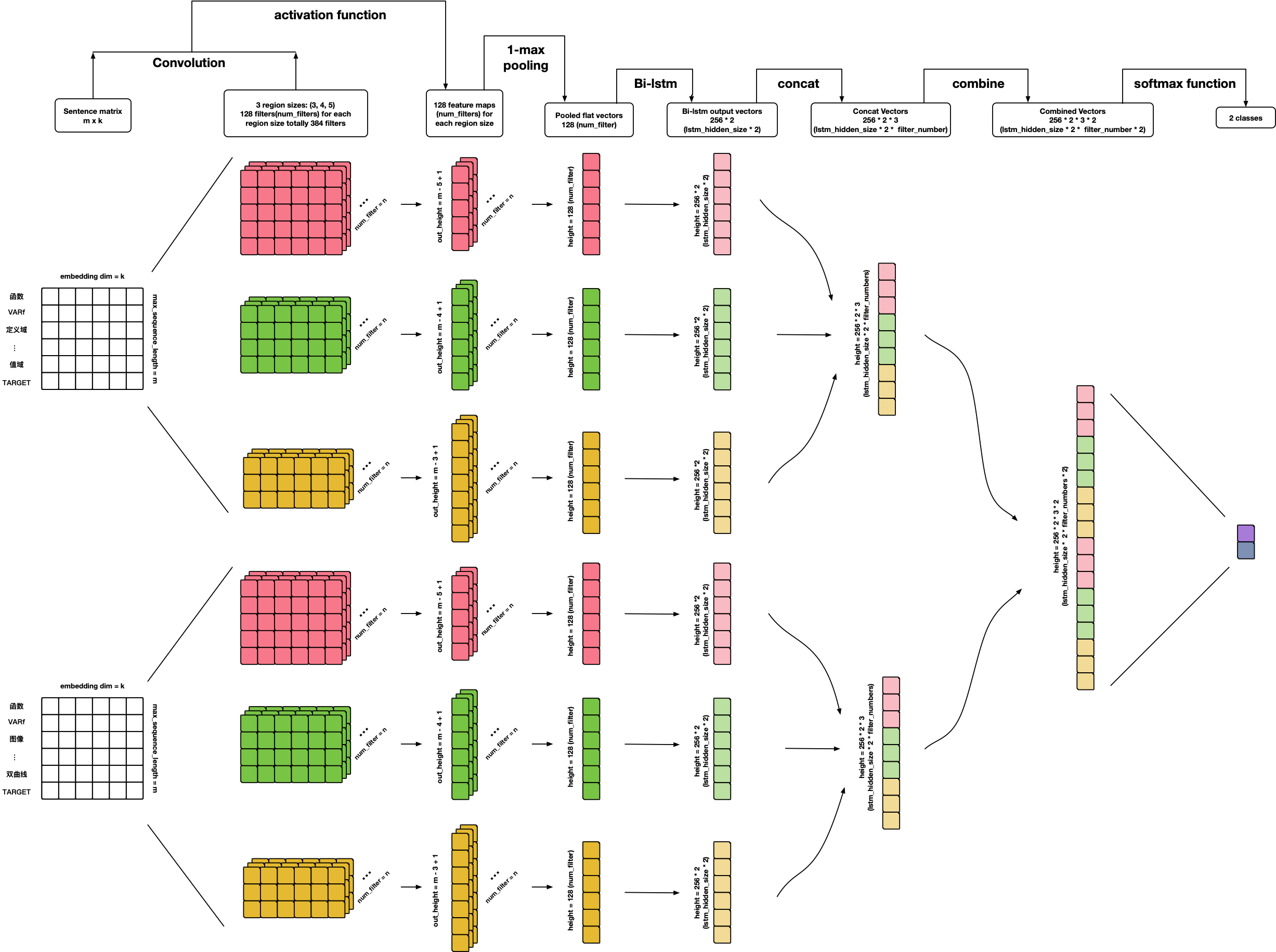

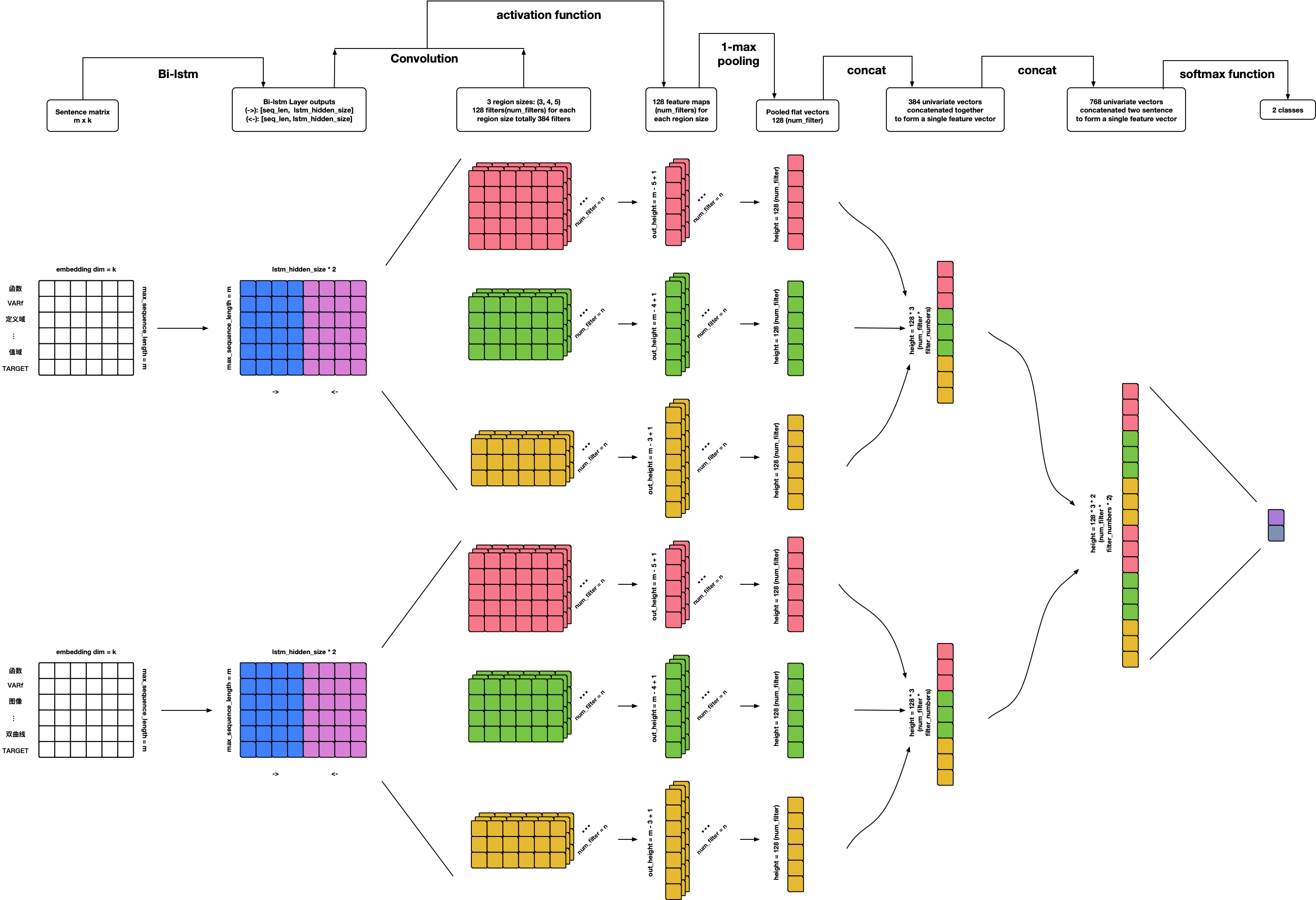

The main objective of the project is to determine whether two given sentences are similar in meaning (a binary classification problem), using neural networks (FastText, CNN, LSTM, etc.).

- Python 3.6

- Tensorflow 1.15.0

- Tensorboard 1.15.0

- Sklearn 0.19.1

- Numpy 1.16.2

- Gensim 3.8.3

- Tqdm 4.49.0

The project structure is below:

    .
    ├── Model
    │   ├── test_model.py
    │   ├── text_model.py
    │   └── train_model.py
    ├── data
    │   ├── word2vec_100.model.* [Need Download]
    │   ├── Test_sample.json
    │   ├── Train_sample.json
    │   └── Validation_sample.json
    ├── utils
    │   ├── checkmate.py
    │   ├── data_helpers.py
    │   └── param_parser.py
    ├── LICENSE
    ├── README.md
    └── requirements.txt

- Make the data support Chinese and English (can use `jieba` or `nltk`).

- Can use your pre-trained word vectors (can use `gensim`).

- Add embedding visualization based on TensorBoard (need to create `metadata.tsv` first).

- Add the correct L2 loss calculation operation.

- Add gradient clipping to prevent gradient explosion.

- Add learning rate decay with exponential decay.

- Add a new Highway Layer (which is useful according to the model performance).

- Add Batch Normalization Layer.

- Add several performance measures (especially the AUC) since the data is imbalanced.

- Can choose to train the model directly or restore the model from a checkpoint in `train.py`.

- Can create the prediction file, which includes the predicted values and predicted labels of the test set, in `test.py`.

- Add other useful data preprocessing functions in `data_helpers.py`.

- Use `logging` to record the whole run (including parameter display, model training info, etc.).

- Provide the ability to save the best n checkpoints in `checkmate.py`, whereas `tf.train.Saver` can only save the last n checkpoints.
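To illustrate the difference between keeping the best n and the last n checkpoints, here is a minimal pure-Python sketch of the bookkeeping involved. It is not the code in `checkmate.py`: the class `BestCheckpointTracker`, its methods, and the `model-<step>` paths are hypothetical names chosen for this example, and the scores are made-up validation values.

```python
import heapq


class BestCheckpointTracker:
    """Remember the n best (score, path) pairs seen so far (hypothetical helper)."""

    def __init__(self, num_to_keep=3, maximize=True):
        self.num_to_keep = num_to_keep
        self.maximize = maximize
        self._heap = []  # min-heap: the worst checkpoint still kept sits at index 0

    def update(self, score, path):
        """Offer a checkpoint; return the path that should be deleted, or None."""
        key = score if self.maximize else -score
        if len(self._heap) < self.num_to_keep:
            heapq.heappush(self._heap, (key, path))
            return None
        if key <= self._heap[0][0]:
            return path  # not better than the worst kept one: drop the new checkpoint
        # Swap the worst kept checkpoint for the new, better one.
        _, evicted = heapq.heapreplace(self._heap, (key, path))
        return evicted

    def best(self):
        """Return the kept paths, best score first."""
        return [path for _, path in sorted(self._heap, reverse=True)]


tracker = BestCheckpointTracker(num_to_keep=2)
for step, auc in [(100, 0.71), (200, 0.78), (300, 0.74), (400, 0.80)]:
    stale = tracker.update(auc, "model-%d" % step)
    # A real training loop would delete the files behind `stale` here.

print(tracker.best())  # ['model-400', 'model-200']
```

A last-n policy would have kept `model-300` and `model-400` here; ranking by score keeps `model-200` instead because it scored higher than `model-300`.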

See the data format in the `/data` folder, which includes the data sample files. For example:

    {"front_testid": "4270954", "behind_testid": "7075962", "front_features": ["invention", "inorganic", "fiber", "based", "calcium", "sulfate", "dihydrate", "calcium"], "behind_features": ["vcsel", "structure", "thermal", "management", "structure", "designed"], "label": 0}

- "testid": just the id.

- "features": the word segments (after removing the stopwords).

- "label": 0 or 1. 1 means that the two sentences are similar, and 0 means the opposite.
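As a rough illustration of how one such record can be read, the sketch below parses the sample shown above with the standard `json` module. It assumes each record is a single JSON object on its own line; the function name `parse_record` is hypothetical and is not part of `data_helpers.py`.

```python
import json

# The sample record from above, as it might appear on one line of a data file.
line = (
    '{"front_testid": "4270954", "behind_testid": "7075962", '
    '"front_features": ["invention", "inorganic", "fiber", "based", '
    '"calcium", "sulfate", "dihydrate", "calcium"], '
    '"behind_features": ["vcsel", "structure", "thermal", "management", '
    '"structure", "designed"], "label": 0}'
)


def parse_record(raw_line):
    """Split one JSON record into ids, the two token lists, and the label."""
    record = json.loads(raw_line)
    ids = (record["front_testid"], record["behind_testid"])
    front = record["front_features"]    # tokens of the first sentence
    behind = record["behind_features"]  # tokens of the second sentence
    label = int(record["label"])        # 1 = similar, 0 = not similar
    return ids, front, behind, label


ids, front, behind, label = parse_record(line)
print(ids, len(front), len(behind), label)
```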

- You can use the `nltk` package if you are going to deal with English text data.

- You can use the `jieba` package if you are going to deal with Chinese text data.

This repository can be used with other datasets (text pair similarity classification) in two ways:

- Modify your datasets into the same format as the sample.

- Modify the data preprocessing code in `data_helpers.py`.

Either way, the right choice depends on your data and task.
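For the first option, a conversion script could look roughly like the sketch below. It is only an illustration under stated assumptions: the input rows, the regex tokenizer, and the tiny stop-word set are stand-ins (a real pipeline would more likely use `nltk` or `jieba`), and the helper names `tokenize` and `to_record` are hypothetical.

```python
import json
import re

# Tiny illustrative stop-word list; a real one would be much larger.
STOPWORDS = {"a", "an", "the", "of", "is", "for", "and"}


def tokenize(text):
    """Lowercase, split on non-alphanumerics, and drop stop words (simple stand-in)."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def to_record(front_id, front_text, behind_id, behind_text, label):
    """Build one record using the same keys as the sample files."""
    return {
        "front_testid": str(front_id),
        "behind_testid": str(behind_id),
        "front_features": tokenize(front_text),
        "behind_features": tokenize(behind_text),
        "label": int(label),
    }


# Made-up input rows: (id, sentence, id, sentence, label).
rows = [
    ("1", "A method for growing calcium sulfate fibers",
     "2", "The thermal management structure of a VCSEL", 0),
]

for row in rows:
    print(json.dumps(to_record(*row)))  # one JSON object per line
```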

You can download the Word2vec model file (dim=100). Make sure it is unzipped and placed under the `/data` folder.

You can pre-train your word vectors (based on your corpus) in many ways:

- Use the `gensim` package to pre-train data.

- Use the `glove` tools to pre-train data.

- You can even use a fastText network to pre-train data.

🤔 Before you open a new issue, please check the data sample files under the data folder and read the other open issues first, because someone may have already asked the same question.

See Usage.

References:

- Personal ideas 🙃

References:

- Convolutional Neural Networks for Sentence Classification

- A Sensitivity Analysis of (and Practitioners' Guide to) Convolutional Neural Networks for Sentence Classification

Warning: The model is usable but not finished yet 🤪!

- Add BN-LSTM cell unit.

- Add attention.

References:

- Personal ideas 🙃

References:

- Personal ideas 🙃

Warning: The model is usable but not finished yet 🤪!

- Add attention penalization loss.

- Add visualization.

Warning: Only the ABCNN-1 model is implemented so far 🤪!

- Add ABCNN-3 model.

黄威,Randolph

SCU SE Bachelor; USTC CS Ph.D.

Email: chinawolfman@hotmail.com

My Blog: randolph.pro

LinkedIn: randolph's linkedin