Experiment tracking for fastai-trained models.

With Neptune, you can:

- Log, organize, visualize, and compare ML experiments in a single place

- Monitor model training live

- Version and query production-ready models and their associated metadata (e.g., datasets)

- Collaborate with the team and across the organization

Metadata you can track:

- Hyperparameters

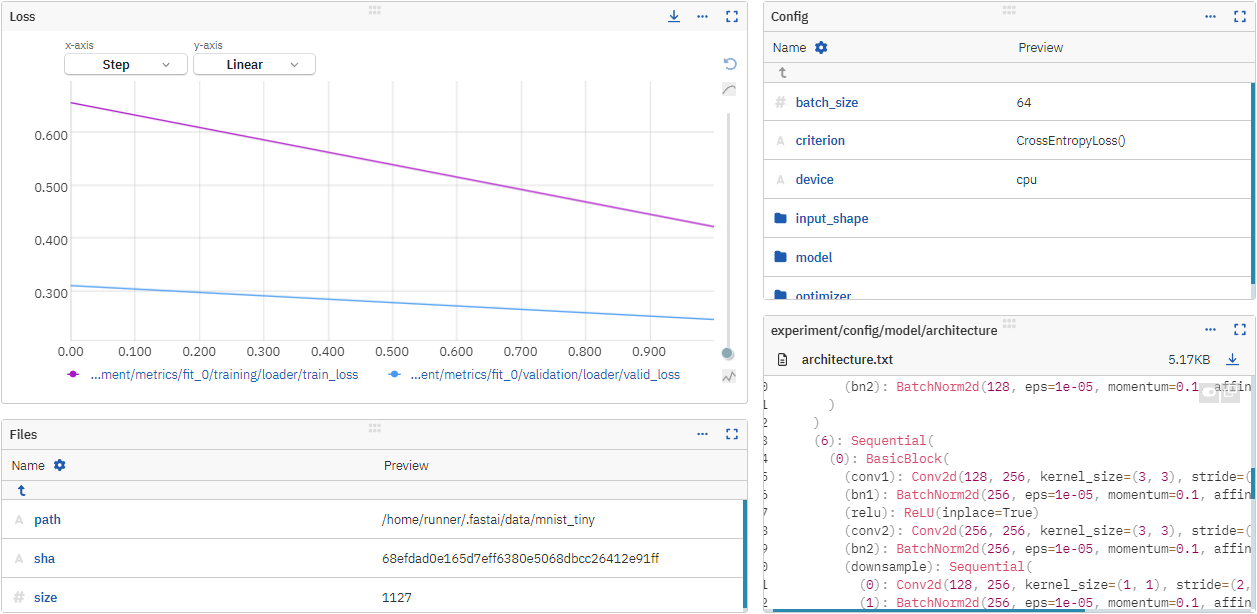

- Losses and metrics

- Training code (Python scripts or Jupyter notebooks) and Git information

- Dataset version

- Model configuration, architecture, and weights

- Other metadata

Related resources:

- Documentation

- Code example on GitHub

- Example dashboard in the Neptune app

- Run example in Google Colab

On the command line:

```sh
pip install neptune-fastai
```

In Python:

```python
import neptune
from fastai.vision.all import *  # provides Learner, cnn_learner, and friends
from neptune.integrations.fastai import NeptuneCallback

# Start a run
run = neptune.init_run(
    project="common/fastai-integration",
    api_token=neptune.ANONYMOUS_API_TOKEN,
)

# Log a single training phase
learn = Learner(...)
learn.fit(..., cbs=NeptuneCallback(run=run))

# Log all training phases of the learner
# (cnn_learner is the older name of vision_learner in recent fastai versions)
learn = cnn_learner(..., cbs=NeptuneCallback(run=run))
learn.fit(...)
learn.fit(...)

# Stop the run
run.stop()
```

If you got stuck or simply want to talk to us, here are your options:

- Check our FAQ page

- You can submit bug reports, feature requests, or contributions directly to the repository.

- Chat! In the Neptune app, click the blue message icon in the bottom-right corner and send a message. A real person will get back to you ASAP (typically very ASAP).

- You can just shoot us an email at support@neptune.ai.
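The usage snippet passes `NeptuneCallback` either to `fit` (to log one training phase) or to the learner constructor (to log every phase). The following is a minimal sketch of why that distinction matters. It uses hypothetical, heavily simplified `MiniLearner` and `Callback` classes, not the real fastai or Neptune APIs, and assumes the scoping behavior we understand fastai's `Learner.fit` to have: callbacks given to `fit` are attached only for that call (fastai does this through its `added_cbs` context manager), while callbacks given to the learner stay attached across calls.

```python
from typing import List, Optional


class Callback:
    """Hypothetical stand-in for a fastai callback: records which fit calls it saw."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.seen_phases: List[int] = []

    def after_fit(self, phase: int) -> None:
        self.seen_phases.append(phase)


class MiniLearner:
    """Hypothetical, simplified learner that mimics fastai's callback scoping."""

    def __init__(self, cbs: Optional[List[Callback]] = None) -> None:
        # Callbacks given to the constructor stay attached for every fit call.
        self.cbs: List[Callback] = list(cbs or [])
        self.n_fits = 0

    def fit(self, cbs: Optional[List[Callback]] = None) -> None:
        extra = list(cbs or [])
        # Callbacks given to fit() are attached only for this call...
        self.cbs.extend(extra)
        try:
            self.n_fits += 1
            for cb in self.cbs:
                cb.after_fit(self.n_fits)
        finally:
            # ...and detached again afterwards.
            for cb in extra:
                self.cbs.remove(cb)


# Pattern 1: callback passed to fit() sees only that training phase.
per_fit = Callback("per-fit")
learn = MiniLearner()
learn.fit(cbs=[per_fit])
learn.fit()
print(per_fit.seen_phases)  # [1]

# Pattern 2: callback passed to the learner sees every training phase.
persistent = Callback("persistent")
learn = MiniLearner(cbs=[persistent])
learn.fit()
learn.fit()
print(persistent.seen_phases)  # [1, 2]
```

With the real integration, the same scoping is what lets the second pattern record both `learn.fit(...)` calls in one Neptune run.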