Data Warehouse for Song Play Analysis of the Sparkify dataset.

Data is gathered from 2 separate sets, and is loaded into an optimized Star Schema that allows to run queries quickly and without the need to perform multiple joins.

Index:

- Files and Requirements

- Schema

- How to Run

sql_queries.py: Python file with all SQL queries required to create staging tables and database star schema, Copy data to staging table and inser values to each dimension and fact table.create_tables.py: Python file that connects to redhift cluster and runs "CREATE TABLE" queries.etl.py: Python file that connects to redhift cluster and runs the "COPY" data into staging area query and also runs the insert queries to the dimension and facts table.dwh.cfg: Configuration file to store parameters between other files.awsUser.cfgFile with AWS KEY and SECRET KEY, file not included in the repo.redshift_cluster.ipynbJupyter Notebook for creating AWS Redshift Cluster.

- python3

- boto3

- json

- configparser

- psycopg2

- Jupyter Notebooks

Notes:

- AWS Account:

- DWH_CLUSTER_TYPE='multi-node'

- DWH_NUM_NODES='4'

- DWH_NODE_TYPE='dc2.large'

- DWH_PORT='5439'

- DWH_REGION='us-west-2'

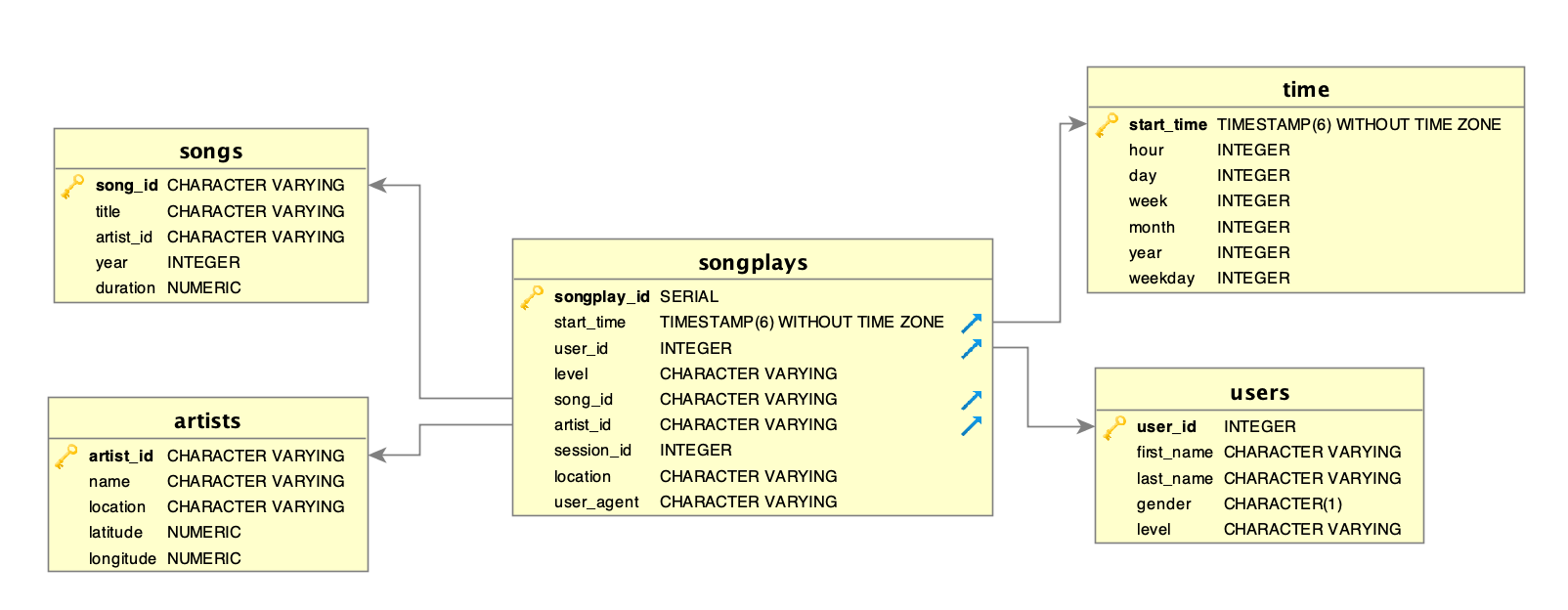

The star schema is the simplest style of data mart schema and is the approach most widely used to develop data warehouses and dimensional data marts. The star schema consists of one or more fact tables referencing any number of dimension tables. The star schema is an important special case of the snowflake schema, and is more effective for handling simpler queries

Source: Wikipedia

- songplays - records in log data associated with song plays i.e. records with page NextSong.

- songplay_id, start_time, user_id, level, song_id, artist_id, session_id, location, user_agent.

- users - users in the app.

- user_id, first_name, last_name, gender, level

- songs - songs in music database.

- song_id, title, artist_id, year, duration

- artists - artists in music database

- artist_id, name, location, latitude, longitude.

- time - timestamps of records in songplays broken down into specific units.

- start_time, hour, day, week, month, year, weekday.

With you AWS Account:

- Create a Redshift Cluster with the parameters noted in 1.4.

- Create IAM client to connect to the cluster

Once you hace created your Redshift Cluster, is necesary to get the Endpoint address and IAM client ARN, and save this in the

dwh.cfgfile.

Open a terminal execute the following commands: $: python create_tables.py, this will create the tables, if no errors occur, got to nex step.$: python etl.py, this will load the data into the stagin area and then populate the star schema.

At this point you should be able to run queries on the data.