Docker

- Very short Docker Overview

- Creating a Docker Image

- Build a Docker Image

- Run a Container

- Data Storage

- More on Docker

- Condor integration

Docker containers are similar to lightweight virtual machines, but have a different architecture and are organized in layers.

For our purposes, what matters is that they are lightweight and provide the user with a virtually isolated environment that includes the application and all its dependencies. Working with Docker containers also enables portability between systems and allows us to provide users with an additional service, in case they don't wish to use Agave applications.

Biocontainers offers a long list of bioinformatics containerized applications you may need.

To create a Docker Image you will need to download and install Docker for your distribution. The suggestion is to follow the tutorial available on the website to get started.

The instructions to build a Docker image are written in a Dockerfile.

For CyVerseUK Docker images we start with a Linux distribution (FROM instruction), ideally the suitable one that provides the smallest base image (though considerations about the number of dependencies and their availability on different systems may lead to the conclusion that it is more convenient to use one of the Ubuntu distributions). For CyVerseUK images the convention is to also specify the tag of the base image (more about tags below), to provide the user with a completely standardised container.

The LABEL field provides the image metadata, which for CyVerseUK images takes the form software/package.version (note that we are not currently respecting the guideline of prefixing each label key with the reverse DNS notation of the CyVerse domain). A list of labels and additional information can be retrieved with docker inspect <image_name>.

The USER will be root by default for CyVerseUK Docker images.

The RUN instruction executes the commands that follow it, installing the requested software and its dependencies. As suggested by the official Docker documentation, the best practice is to write all the commands in the same RUN instruction, separated by && (the same applies to any other instruction), to minimise the number of layers. Note that the build process is NOT interactive and the user will not be able to answer prompts, so use -y or -yy when running apt-get update and apt-get install. It is also possible to set ARG DEBIAN_FRONTEND=noninteractive to disable the prompts (the ARG instruction sets a variable at build time only).

The WORKDIR instruction sets the working directory (/data/ for my images).
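The instructions described so far can be combined as in the following sketch. It is only an illustration, not an actual CyVerseUK Dockerfile: the installed package (vim), the label keys and values, and the scratch build directory are placeholders chosen for the example. The Dockerfile is written through a shell here-document so that it can be inspected before any build is attempted.

```shell
# Write an illustrative Dockerfile into a scratch directory (hypothetical location).
build_dir="$(mktemp -d)"

cat > "$build_dir/Dockerfile" <<'EOF'
# Base image with an explicit tag.
FROM ubuntu:16.04

# Image metadata; keys and values here are placeholders.
LABEL ubuntu.version="16.04" vim.version="placeholder"

# root is already the default user; stated here only for clarity.
USER root

# Build-time only variable that disables interactive prompts.
ARG DEBIAN_FRONTEND=noninteractive

# A single RUN instruction, commands chained with &&, to keep the layer count low.
RUN apt-get update && \
    apt-get install -y vim

# Working directory of the container.
WORKDIR /data/
EOF

# Print the generated file for inspection.
cat "$build_dir/Dockerfile"
```

On a machine with Docker installed, the image could then be built by passing "$build_dir" as the last argument of docker build.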

If needed, the following instructions may also be present:

ADD/COPY add files/data/software to the image. Ideally the source will be a publicly available link or repository. The difference between the two instructions is that ADD can also extract local archives and fetch URLs (so it is preferred in CyVerseUK images). However, ADD does NOT extract archives fetched from a URL: the extraction has to be performed explicitly in a later step. It is also worth noting that the official documentation now recommends, when possible, avoiding ADD and using wget or curl instead.

ENV sets environment variables. Note that it supports a few standard bash modifiers, such as the following:

${variable:-word}

${variable:+word}

MAINTAINER is the author of the image. Note that MAINTAINER has since been deprecated, so from now on the author will be listed as a key-value pair in LABEL.

ENTRYPOINT may provide a default command to run when starting a new container, making the Docker image an executable. (It is still possible to override the ENTRYPOINT instruction at run time with the --entrypoint flag.)
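The two ENV modifiers listed above mirror the shell parameter expansions of the same form, so their effect can be tried out directly in a terminal. The snippet below is a sketch in plain shell rather than Dockerfile syntax, and DATA_DIR is a hypothetical variable name; how a Dockerfile treats a variable that is set but empty should be checked against the Docker documentation.

```shell
# ${variable:-word}: expands to word when the variable is unset (or empty in the shell).
# ${variable:+word}: expands to word only when the variable is set (and non-empty in the shell).
unset DATA_DIR
unset_default="${DATA_DIR:-/data}"
unset_alt="${DATA_DIR:+custom}"

DATA_DIR=/mnt/input
set_default="${DATA_DIR:-/data}"
set_alt="${DATA_DIR:+custom}"

echo "unset: default='$unset_default' alternative='$unset_alt'"
echo "set:   default='$set_default' alternative='$set_alt'"
```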

Once the Dockerfile has been written, the easiest way to build a Docker image is to run the following command:

docker build -t image_name[:tag] path/to/Dockerfile

Each image can be given a tag at build time (the default is latest). Note that the last argument is the directory containing the Dockerfile (the build context) rather than the file itself; it's a good idea to have one Dockerfile per folder, so that the previous command can be run with . as the path.

Please always provide a tag if you wish to use the container to run an app on the CyVerse system. Pulling :latest doesn't guarantee that you get the most up-to-date app on the system, and hides possibly important information from the final user (or the poor person debugging).
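One way to make the explicit-tag rule hard to forget is to compose the image reference through a small helper that rejects a missing or latest tag. This is a hypothetical convenience function, not part of Docker or of the CyVerseUK tooling, and cyverseuk/example is a made-up image name.

```shell
# Print "name:tag", or fail when the tag is missing or is "latest".
image_ref() {
  name="$1"
  tag="${2:-}"
  if [ -z "$tag" ] || [ "$tag" = "latest" ]; then
    echo "an explicit tag other than 'latest' is required for $name" >&2
    return 1
  fi
  printf '%s:%s\n' "$name" "$tag"
}

# Compose the reference, then print the build command that would use it.
ref="$(image_ref cyverseuk/example 1.0.0)"
echo "docker build -t $ref ."
```

The build command is only printed here; running it requires Docker and a Dockerfile in the current directory.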

To make an image publicly available it needs to be uploaded to DockerHub (or some other registry; you may want to contribute to Biocontainers, if your image adheres to their guidelines). You will have to create an account for yourself/your organization and follow the official documentation. To summarize, use the following command:

docker tag <image_ID> <DockerHub_username/image_name[:tag]>

<image_ID> can be easily determined with docker images. Note that <DockerHub_username/image_name> used to require manual creation in DockerHub before the above command was run; this is no longer the case.

After this you need to push the image:

docker push <DockerHub_username/image_name[:tag]>

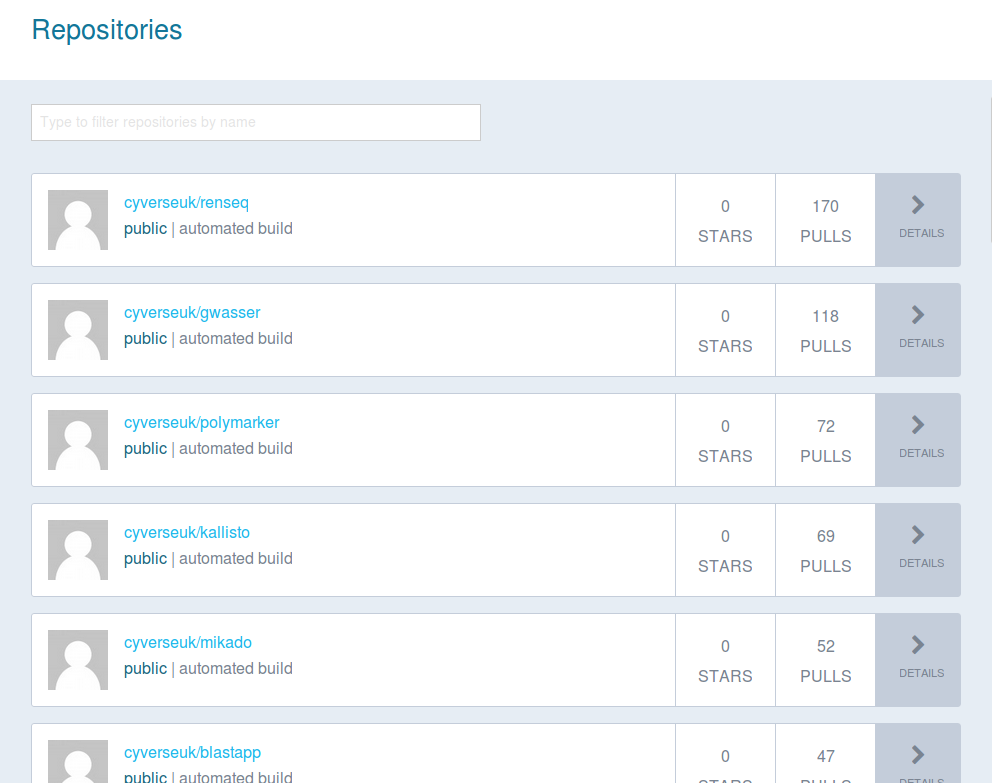

CyVerseUK Docker images can be found under the cyverseuk organization.

We are using automated builds, which trigger a new build every time the linked GitHub repository is updated.

Another useful feature of automated builds is that the Dockerfile is publicly displayed, allowing the user to know exactly how the image was built and what to expect from a container running it. The GitHub README.md file is used as the long description of the Docker image.

For CyVerseUK images, when there is a change in the image, a new build with the same tag as the GitHub release is triggered to keep track of the different versions. At the same time an update of the :latest tag is also triggered (you need to manually add a rule for this to happen, it's not done automatically).

Known problems with automated builds: for very big images the automated build will fail due to a timeout (e.g. cyverseuk/polymarker_wheat, ~10G). Building from the command line works fine. Also, in the future we won't need this kind of image (it was basically incorporating a volume), as the storage system will store common data to transfer to Condor.

If running a container locally we often want to run it in interactive mode:

docker run -ti <image_name>

If the interactive mode is not needed don't use the -i option.

In case the image is not available locally, Docker will try to download it from the DockerHub registry.

To use data available on our local machine we may need to use a volume. The -v <source_dir>:<image_dir> option mounts source_dir on the host to image_dir in the Docker container. Any change made inside the container will affect the host directory too.

It is possible to stop a container and resume using it later with:

docker start <container_name>

docker attach <container_name>

It is possible to build a Docker image interactively instead of writing a Dockerfile. This is not the best practice in production as it doesn't provide documentation and automation between GitHub and DockerHub. Nevertheless it may be useful for testing, debugging or private use.

The user has to run a container interactively (the base image to use is up to them):

docker run --name container_name -ti ubuntu:16.04

The --name option allows the user to name the container, so that it's easier to refer to it later.

Once in the interactive session in the container the user can run all the commands they want (installing packages, writing scripts and so on). Let's say we want our new image to provide vim.

root@ID:/# apt-get update && apt-get install vim

Then we exit the container:

root@ID:/# exit

Now we can list all the containers:

docker container ls -a

The command will return something similar to the following:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1a3aa61f6bc2 ubuntu:16.04 "/bin/bash" 2 minutes ago Exited (0) About a minute ago container_name

Now we can commit the container as a new image:

docker commit container_name my_new_image

If we didn't name the container we can use the ID instead. The user is then able to run the new image as usual.

Often you will want to work with or process your data in a Docker container.

There are three types of data storage available in Docker. Here we'll cover only the first two:

- volumes

- bind mounts

- tmpfs mounts

Volumes and bind mounts differ in that, while both store data in the local File System (FS), the former are stored in a FS location managed by Docker, while the latter can be anywhere on the FS. Of course this has implications in terms of security: you must think about what's inside the path you are mounting, keeping in mind that it could be modified from inside the container.

Both volumes and bind mounts can be mounted using the same syntax:

- with the --volume or -v flag:

--volume <name of the volume for named volumes | empty for anonymous volumes | path to the file or directory to be mounted for bind mounts>:<path/to/be/mounted/to/in/the/container>[:options, e.g. ro for read-only]

- with the --mount flag, which consists of a list of key=value pairs:

--mount type=<bind | volume | tmpfs>,source=</path/to/source | name_of_volume>,destination=/path/to/destination,[readonly]

There are more options available in this syntax, refer to the official documentation.
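As an illustration of the two syntaxes, the sketch below composes equivalent read-only bind-mount arguments for both flags. The helper names (volume_arg, mount_arg) and the paths are hypothetical; the docker commands are only printed, so nothing is mounted until a printed line is run on a host with Docker.

```shell
# Compose the value for -v / --volume: <source>:<destination>[:ro]
volume_arg() {
  src="$1"; dst="$2"; mode="${3:-}"
  if [ "$mode" = "ro" ]; then
    printf '%s:%s:ro\n' "$src" "$dst"
  else
    printf '%s:%s\n' "$src" "$dst"
  fi
}

# Compose the value for --mount as comma-separated key=value pairs.
mount_arg() {
  kind="$1"; src="$2"; dst="$3"; mode="${4:-}"
  arg="type=$kind,source=$src,destination=$dst"
  if [ "$mode" = "ro" ]; then
    arg="$arg,readonly"
  fi
  printf '%s\n' "$arg"
}

echo "docker run -v $(volume_arg /home/user/data /data ro) ubuntu:16.04 ls /data"
echo "docker run --mount $(mount_arg bind /home/user/data /data ro) ubuntu:16.04 ls /data"
```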

This part has since been modified such that the preferred option is now --mount. Please refer to the official Docker documentation and the above text. We leave the following on Data Volumes here as historical documentation on volumes.

Often you will want to work with or process your data in a Docker container. For our purposes we may need to mount a local directory into a container. The command for doing so is the following:

docker run -v /path/to/local/folder:/volume <image>[:<tag>]

- Important: the default is to mount in read-write mode, so if there is a possibility of deleting or corrupting your files, make a copy first. (Alternatively there's an option to mount as read-only, :ro.)

- You can mount multiple data volumes.

- Data volumes are designed to persist data, independent of the container’s life cycle. Docker therefore never automatically deletes volumes when you remove a container, nor will it “garbage collect” volumes that are no longer referenced by a container.

A Docker data volume persists after a container is deleted. It is therefore possible to create and add a data volume without necessarily linking it to a local folder.

- It is possible to mount a single file instead of a directory.

- If your final aim is to use Docker for CyVerseUK applications, note that volumes are not supported by HTCondor (it uses transfer_input_files instead).

- To list volumes run the following command:

docker volume ls

- When writing a Dockerfile it is worth noting that the source command is not available, as the default interpreter is /bin/sh (and not /bin/bash). A possible solution is to use the following command:

/bin/bash -c "source <whatever_needs_to_be_sourced>"

- See all existing containers:

docker ps -a

Or in the new syntax:

docker container ls -a

- Remove orphaned volumes from Docker:

sudo docker volume ls -f dangling=true | awk '{print $2}' | tail -n +2 | xargs sudo docker volume rm

- Remove all containers:

docker container ls -a | awk '{print $1}' | tail -n +2 | xargs docker rm

To avoid accumulating containers it's also possible to run docker with the --rm option, which removes the container after execution.

- Remove dangling images (i.e. untagged): (to avoid errors due to images being used by containers, remove the containers first)

docker image ls -qf dangling=true | xargs docker rmi

- Remove dangling images AND the first container that is using them, if any: (may need to be run more than once)

docker image ls -qf dangling=true | xargs docker rmi 2>&1 | awk '$1=="Error" {print$NF}' | xargs docker rm

To avoid running the above command multiple times I wrote this script (should work, no guarantees).

- See the number of layers:

docker history <image_name> | tail -n +2 | wc -l

- See the image size:

docker image ls <image_name> | tail -n +2 | awk '{print$(NF-1)" "$NF}'
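Several of the clean-up commands above share the same pattern: awk keeps one column of the listing and tail -n +2 drops the header line. The sketch below applies that pattern to the sample listing shown earlier on this page, so it can be tried without a Docker daemon; sample_listing and container_ids are hypothetical helper names, and a real run would pipe docker container ls -a instead of the here-document.

```shell
# Sample output standing in for `docker container ls -a`.
sample_listing() {
cat <<'EOF'
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
1a3aa61f6bc2 ubuntu:16.04 "/bin/bash" 2 minutes ago Exited (0) About a minute ago container_name
EOF
}

# Keep the first column, then drop the header line.
container_ids() {
  awk '{print $1}' | tail -n +2
}

sample_listing | container_ids
```

When Docker is available, docker container ls -aq prints the IDs directly, which avoids parsing the table at all.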

Instructions other than the ones listed here are available: EXPOSE, VOLUME, STOPSIGNAL, CMD, ONBUILD, HEALTHCHECK. These are usually not required for our purposes, but you can find more information in the official Docker documentation.

For previous docker versions ImageLayers.io used to provide the user with a number of functionalities. Badges were available to clearly display the number of layers and the size of the image (this can be very useful to know before downloading the image and running a container if time/resources are a limiting factor). We restored only this last feature with a bash script (ImageInfo) that uses shields.io.

IMPORTANT: You may encounter problems when trying to build a Docker image or connect to the internet from inside a container if you are on a local network. From the Docker documentation:

...all localhost addresses on the host are unreachable from the container's network.

To make it work:

- find out your DNS address:

nmcli dev list iface em1 | grep IP4.DNS | awk '{print $2}'

- option 1 (easier and preferred): build the image and run the container with --dns=<your_DNS_address>.

- option 2: in the RUN instruction, rewrite the /etc/resolv.conf file to list your DNS as nameserver.
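For option 2, what has to end up in /etc/resolv.conf is one nameserver line per DNS server. The sketch below writes such a file to a scratch location so that the format can be checked; 192.0.2.53 is a documentation-only placeholder address, and in a Dockerfile the same printf would target /etc/resolv.conf inside the RUN instruction that needs network access.

```shell
# Placeholder DNS address (TEST-NET-1 range, reserved for documentation).
dns_address="192.0.2.53"

# Scratch file standing in for /etc/resolv.conf.
resolv_file="$(mktemp)"
printf 'nameserver %s\n' "$dns_address" > "$resolv_file"

cat "$resolv_file"
```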

The use of volumes (or Data Volume Containers) is not enabled, at least for now (it would require giving permissions to specific folders, and it is not clear whether volumes would be mounted read-only, which would be fine, or read-write, which would be less so). To get the same result we need to use transfer_input_files, as described in the next section.

It's also possible that the Docker image has to be updated to give 777 permissions to scripts, because of how Condor handles Docker.
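The following is a minimal sketch of an HTCondor submit description that runs a job in a Docker image and ships the input data with transfer_input_files instead of a volume. The image name, the script and the file names are hypothetical, and the settings actually used on the CyVerseUK system may differ.

```shell
# Write an illustrative submit description into a scratch directory.
submit_dir="$(mktemp -d)"

cat > "$submit_dir/job.submit" <<'EOF'
# Run the job inside a Docker container.
universe                = docker
docker_image            = cyverseuk/example:1.0.0

# Script executed in the container and its arguments.
executable              = run_analysis.sh
arguments               = input.fasta

# Inputs are copied to the execute node instead of being mounted as a volume.
should_transfer_files   = YES
transfer_input_files    = input.fasta
when_to_transfer_output = ON_EXIT

output                  = job.out
error                   = job.err
log                     = job.log

queue
EOF

cat "$submit_dir/job.submit"
```

On a submit node the file would be passed to condor_submit.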