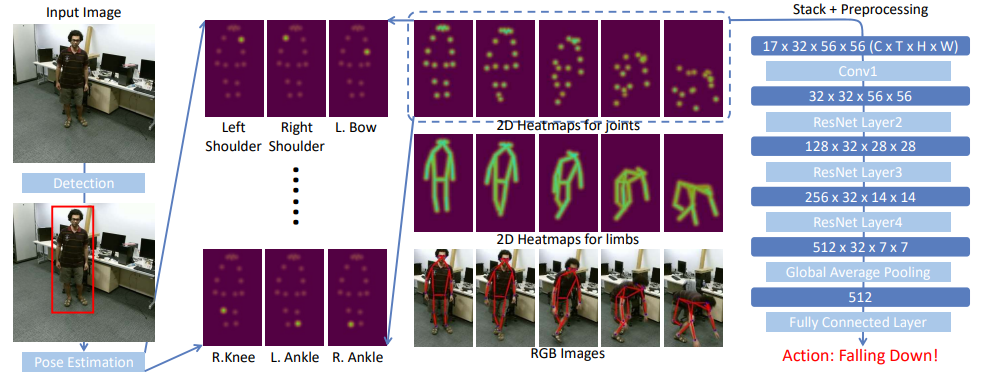

PoseC3D is the first framework that formats 2D human skeletons as 3D voxels and processes human skeletons with 3D ConvNets. We release multiple PoseC3D variants instantiated with different backbones and trained on different datasets.
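To make the "2D skeletons as 3D voxels" idea concrete, the following is a minimal NumPy sketch, not the repository's implementation. It assumes each joint is rendered as a Gaussian heatmap weighted by its confidence and that the per-frame heatmaps are stacked along time. The function name `keypoints_to_heatmap_volume`, the 17-joint layout, and `sigma=0.6` are illustrative assumptions; the 48-frame clip length and 64 x 64 resolution mirror the test pipeline shown later in this document.

```python
import numpy as np


def keypoints_to_heatmap_volume(keypoints, scores, height, width, sigma=0.6):
    """Stack per-frame Gaussian joint heatmaps into a K x T x H x W volume.

    keypoints: float array of shape (T, K, 2) holding (x, y) pixel coordinates.
    scores:    float array of shape (T, K) holding keypoint confidences.
    """
    num_frames, num_joints, _ = keypoints.shape
    ys, xs = np.mgrid[0:height, 0:width]  # pixel grids, each of shape (H, W)
    volume = np.zeros((num_joints, num_frames, height, width), dtype=np.float32)
    for t in range(num_frames):
        for k in range(num_joints):
            x, y = keypoints[t, k]
            dist_sq = (xs - x) ** 2 + (ys - y) ** 2
            # Gaussian centred on the joint, scaled by its confidence.
            volume[k, t] = scores[t, k] * np.exp(-dist_sq / (2 * sigma ** 2))
    return volume


# Toy input (hypothetical): 48 frames, 17 joints, random positions in a 64 x 64 frame.
rng = np.random.default_rng(0)
kpts = rng.uniform(0, 63, size=(48, 17, 2))
conf = np.ones((48, 17))
volume = keypoints_to_heatmap_volume(kpts, conf, height=64, width=64)
print(volume.shape)  # (17, 48, 64, 64)
```

The resulting array has one channel per joint and a temporal axis, which is the layout a 3D ConvNet expects.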

    @article{duan2021revisiting,
      title={Revisiting skeleton-based action recognition},
      author={Duan, Haodong and Zhao, Yue and Chen, Kai and Lin, Dahua and Dai, Bo},
      journal={arXiv preprint arXiv:2104.13586},
      year={2021}
    }

We release numerous weights trained on various datasets and with multiple 3D backbones. The accuracy of each modality links to the weight file.

| Dataset | Backbone | Annotation | Pretrain | Training Epochs | GPUs | Joint Top-1 (Config Link: Weight Link) | Limb Top-1 (Config Link: Weight Link) | Two-Stream Top-1 |
|---|---|---|---|---|---|---|---|---|
| NTURGB+D XSub | SlowOnly-R50 | HRNet 2D Pose | None | 240 | 8 | joint_config: 93.7 | limb_config: 93.4 | 94.1 |
| NTURGB+D XSub | C3D-light | HRNet 2D Pose | None | 240 | 8 | joint_config: 92.7 | limb_config: 92.6 | 93.3 |
| NTURGB+D XSub | X3D-shallow | HRNet 2D Pose | None | 240 | 8 | joint_config: 92.1 | limb_config: 91.6 | 92.4 |
| NTURGB+D XView | SlowOnly-R50 | HRNet 2D Pose | None | 240 | 8 | joint_config: 96.5 | limb_config: 96.0 | 96.9 |
| NTURGB+D 120 XSub | SlowOnly-R50 | HRNet 2D Pose | None | 240 | 8 | joint_config: 85.9 | limb_config: 85.9 | 86.7 |
| NTURGB+D 120 XSet | SlowOnly-R50 | HRNet 2D Pose | None | 240 | 8 | joint_config: 89.7 | limb_config: 89.7 | 90.3 |
| Kinetics-400 | SlowOnly-R50 (stages: 3, 4, 6) | HRNet 2D Pose | None | 240 | 8 | joint_config: 47.3 | limb_config: 46.9 | 49.1 |
| Kinetics-400 | SlowOnly-R50 (stages: 4, 6, 3) | HRNet 2D Pose | None | 240 | 8 | joint_config: 46.6 | limb_config: 45.7 | 47.7 |
| FineGYM¹ | SlowOnly-R50 | HRNet 2D Pose | None | 240 | 8 | joint_config: 93.8 | limb_config: 93.8 | 94.1 |
| FineGYM¹ | C3D-light | HRNet 2D Pose | None | 240 | 8 | joint_config: 91.8 | limb_config: 91.2 | 92.1 |
| FineGYM¹ | X3D-shallow | HRNet 2D Pose | None | 240 | 8 | joint_config: 91.4 | limb_config: 90.0 | 91.8 |
| UCF101² | SlowOnly-R50 | HRNet 2D Pose | Kinetics-400 | 120 | 8 | joint_config: 86.9 | | |
| HMDB51² | SlowOnly-R50 | HRNet 2D Pose | Kinetics-400 | 120 | 8 | joint_config: 69.4 | | |
| Diving48 | SlowOnly-R50 | HRNet 2D Pose | None | 240 | 8 | joint_config: 54.5 | | |

Note

1. For FineGYM, we report the mean class Top-1 accuracy instead of the Top-1 accuracy.
2. For UCF101 and HMDB51, we provide the checkpoints trained on the official split 1.
3. We use the linear scaling rule for the learning rate (Initial LR ∝ Batch Size). If you change the training batch size, remember to change the initial LR proportionally.
4. Though optimized, multi-clip testing may consume a large amount of time. For faster inference, you may change the `test_pipeline` to disable multi-clip testing, which may lead to a small drop in recognition performance. Below is the guide:

       test_pipeline = [
           # Change `num_clips=10` to `num_clips=1`
           dict(type='UniformSampleFrames', clip_len=48, num_clips=10, test_mode=True),
           dict(type='PoseDecode'),
           dict(type='PoseCompact', hw_ratio=1., allow_imgpad=True),
           dict(type='Resize', scale=(64, 64), keep_ratio=False),
           # Change `double=True` to `double=False`
           dict(type='GeneratePoseTarget', with_kp=True, with_limb=False, double=True,
                left_kp=left_kp, right_kp=right_kp),
           dict(type='FormatShape', input_format='NCTHW_Heatmap'),
           dict(type='Collect', keys=['imgs', 'label'], meta_keys=[]),
           dict(type='ToTensor', keys=['imgs'])
       ]
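For the linear scaling rule mentioned in the notes above, the arithmetic can be sketched as follows. The helper `scale_lr` is hypothetical, and the reference setting (8 GPUs, 32 videos per GPU, initial LR 0.4) is an assumed example rather than a value taken from any released config; substitute the numbers from the config you actually use.

```python
def scale_lr(base_lr, base_batch_size, new_batch_size):
    """Scale the initial learning rate in proportion to the total batch size."""
    return base_lr * new_batch_size / base_batch_size


# Hypothetical reference setting: 8 GPUs x 32 videos per GPU with an initial LR of 0.4.
base_batch = 8 * 32
# Hypothetical new setting: 4 GPUs x 32 videos per GPU.
new_batch = 4 * 32
print(scale_lr(0.4, base_batch, new_batch))  # 0.2
```

Halving the total batch size halves the initial LR; doubling it doubles the LR.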

You can use the following command to train a model.

    bash tools/dist_train.sh ${CONFIG_FILE} ${NUM_GPUS} [optional arguments]

    # For example: train PoseC3D on FineGYM (HRNet 2D skeleton, Joint Modality) with 8 GPUs, with validation, and test the last and the best (with best validation metric) checkpoint.
    bash tools/dist_train.sh configs/posec3d/slowonly_r50_gym/joint.py 8 --validate --test-last --test-best

You can use the following command to test a model.

    bash tools/dist_test.sh ${CONFIG_FILE} ${CHECKPOINT_FILE} ${NUM_GPUS} [optional arguments]

    # For example: test PoseC3D on FineGYM (HRNet 2D skeleton, Joint Modality) with metrics `top_k_accuracy` and `mean_class_accuracy`, and dump the result to `result.pkl`.
    bash tools/dist_test.sh configs/posec3d/slowonly_r50_gym/joint.py checkpoints/SOME_CHECKPOINT.pth 8 --eval top_k_accuracy mean_class_accuracy --out result.pkl
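The table above reports Top-1, mean class Top-1 (for FineGYM), and Two-Stream accuracy. The sketch below illustrates how these numbers relate, using toy score arrays. It assumes that each stream yields one score vector per test sample and that two-stream fusion is a weighted average of joint and limb scores; the equal weighting and the helper names (`fuse_scores`, `top1_accuracy`, `mean_class_accuracy`) are illustrative assumptions, not the repository's API. Whether a dumped `result.pkl` has exactly this layout should be checked before reusing the code.

```python
import numpy as np


def fuse_scores(joint_scores, limb_scores, joint_weight=0.5):
    """Weighted average of per-sample class scores from the two streams."""
    joint_scores = np.asarray(joint_scores, dtype=np.float64)
    limb_scores = np.asarray(limb_scores, dtype=np.float64)
    return joint_weight * joint_scores + (1.0 - joint_weight) * limb_scores


def top1_accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class matches the label."""
    return float(np.mean(np.argmax(scores, axis=1) == labels))


def mean_class_accuracy(scores, labels):
    """Average of the per-class recalls, so every class counts equally."""
    preds = np.argmax(scores, axis=1)
    per_class = [np.mean(preds[labels == c] == c) for c in np.unique(labels)]
    return float(np.mean(per_class))


# Toy scores for 4 samples and 2 classes; class 0 has 3 samples, class 1 has 1.
labels = np.array([0, 0, 0, 1])
joint = np.array([[0.9, 0.1], [0.8, 0.2], [0.4, 0.6], [0.7, 0.3]])
limb = np.array([[0.6, 0.4], [0.7, 0.3], [0.7, 0.3], [0.2, 0.8]])
fused = fuse_scores(joint, limb)

print(top1_accuracy(joint, labels))        # 0.5
print(top1_accuracy(fused, labels))        # 1.0
print(mean_class_accuracy(joint, labels))  # 1/3: recall 2/3 on class 0, 0 on class 1
```

On imbalanced label sets the two metrics diverge, as the joint stream shows here: its Top-1 is 0.5 but its mean class accuracy is lower because the single class-1 sample is misclassified.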