| layout | title | nav_order |
|---|---|---|
| default | Security | 20 |

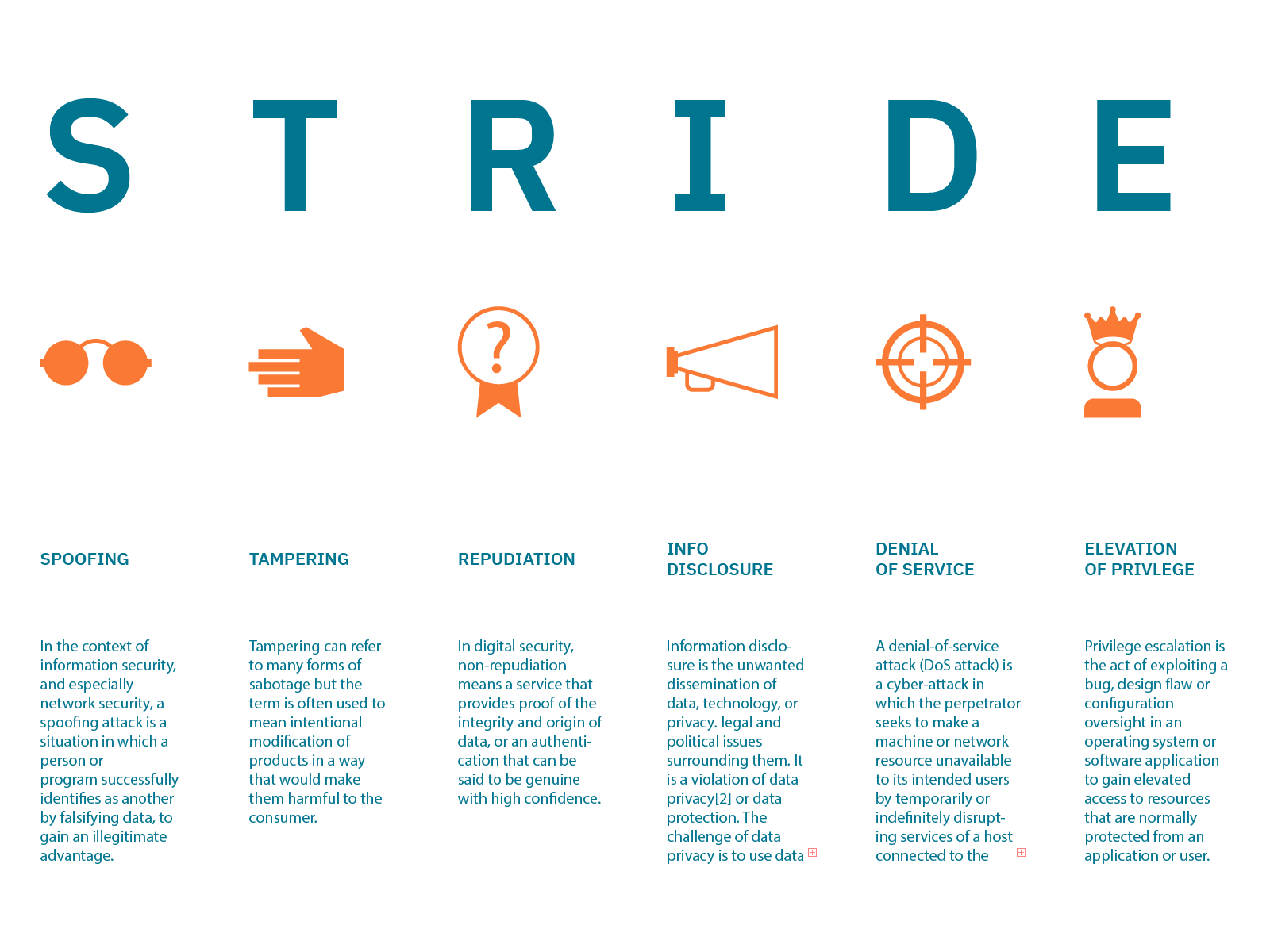

In general, AI systems are IT systems at their core, so they face the same security risks as any other IT system. As a first step, think through the potential threats to the system. The STRIDE model (Spoofing, Tampering, Repudiation, Information disclosure, Denial of service, Elevation of privilege) helps structure that analysis.
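As a rough illustration of a STRIDE walkthrough, the sketch below pairs each system component with each of the six STRIDE categories to produce a review checklist. The component names and the `threat_checklist` helper are hypothetical and chosen only for this example; a real threat model would go further and record concrete threats and mitigations per pair.

```python
from enum import Enum


class Stride(Enum):
    """The six STRIDE threat categories."""
    SPOOFING = "Spoofing"
    TAMPERING = "Tampering"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information disclosure"
    DENIAL_OF_SERVICE = "Denial of service"
    ELEVATION_OF_PRIVILEGE = "Elevation of privilege"


def threat_checklist(components):
    """Return one (component, category) pair per component and STRIDE category."""
    return [(component, category)
            for component in components
            for category in Stride]


# Hypothetical components of an AI system, for illustration only.
components = ["training data store", "model API"]

for component, category in threat_checklist(components):
    print(f"{component}: how could {category.value.lower()} happen here?")
```

Each printed question is a prompt for the review session; the answers, not the list itself, are what feed the mitigation step.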

Depending on the individual threats to the system, the IBM Secure Engineering Framework can be used to mitigate those threats and their associated risks.

- IBM Secure Engineering Portal

- IBM Secure Engineering Framework

- IBM Architecture Center - Application Security

- IBM Architecture Center - Data Security

- IBM Architecture Center - Secure DevOps

There are, however, some key differences between AI and regular IT systems, and the same is true of their security risks. The article "Security Attacks: Analysis of Machine Learning Models" gives an introduction to ML-specific security risks.

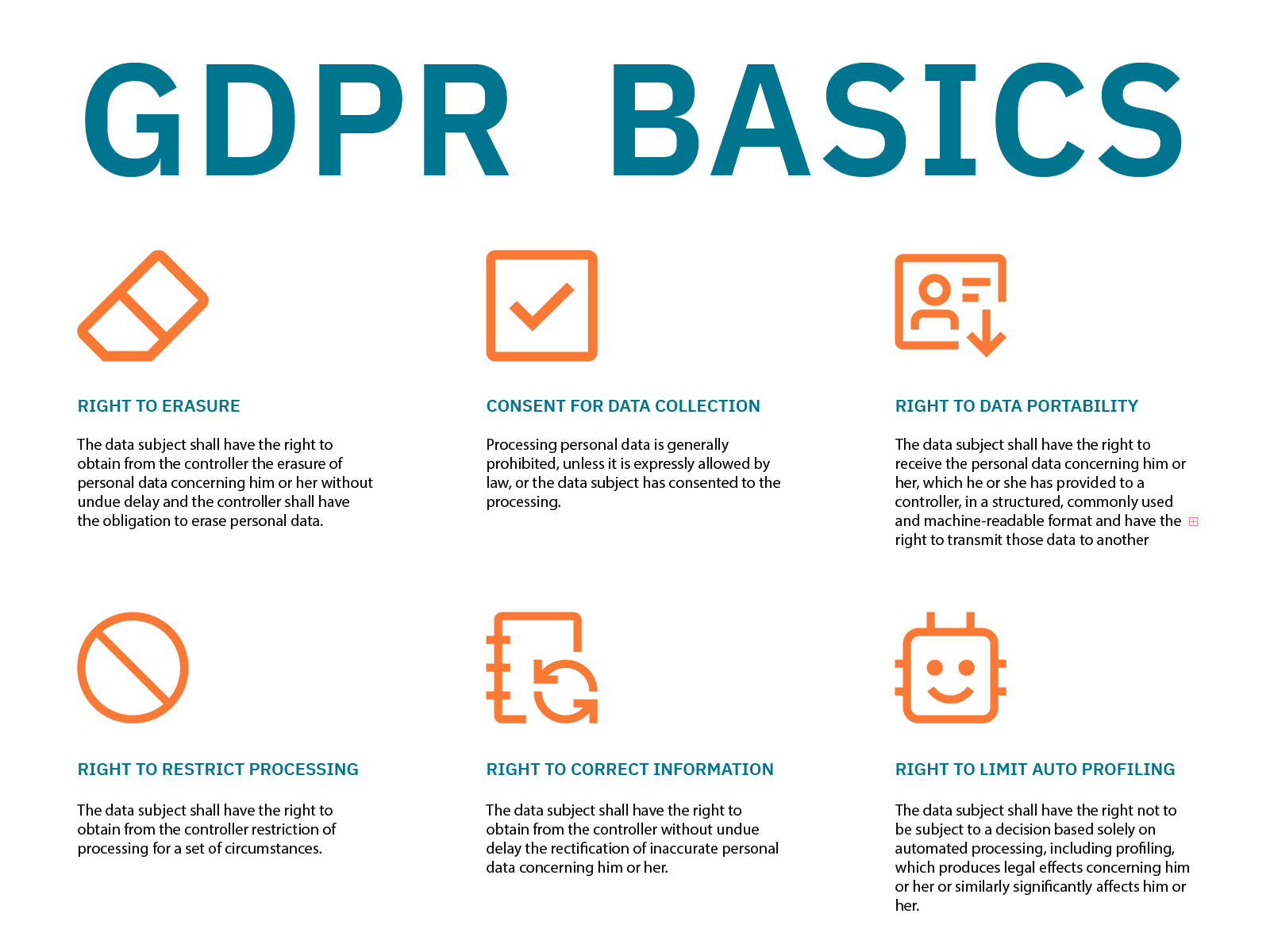

Most AI systems are very "data-hungry", which can create a conflict with privacy regulations such as GDPR or CCPA. To keep privacy issues from breaking the whole system later, it is recommended to build in privacy by design from the start. The cheat sheet below helps you when you design your AI systems:

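Important: GDPR applies to personal data, and that includes business-related data that identifies an individual, such as a work email address. Business-sensitive data that is not personal falls outside GDPR but should be protected just the same!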
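One common privacy-by-design measure is to pseudonymize direct identifiers before data enters a training pipeline. The sketch below is a minimal example using a keyed hash (HMAC-SHA256) from the Python standard library. The function names, field names, and key handling are assumptions made for illustration, not part of any framework referenced above. Note that pseudonymized data generally still counts as personal data under GDPR, so this reduces risk rather than removing obligations.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, id_fields: set, key: bytes) -> dict:
    """Return a copy of the record with the listed identifier fields pseudonymized."""
    return {
        name: pseudonymize(str(value), key) if name in id_fields else value
        for name, value in record.items()
    }


# Hypothetical record and key, for illustration only.
# In practice the key would come from a secrets manager, not from source code.
secret_key = b"replace-with-a-managed-secret"
record = {"email": "jane.doe@example.com", "age": 42}

safe_record = pseudonymize_record(record, {"email"}, secret_key)
print(safe_record["age"])         # unchanged, non-identifying field
print(len(safe_record["email"]))  # length of the hex digest
```

Using a keyed hash rather than a plain hash means the same input maps to the same token (so joins across tables still work), while someone without the key cannot rebuild the mapping by hashing guessed identifiers.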